Auto merge of #70793 - the8472:in-place-iter-collect, r=Amanieu

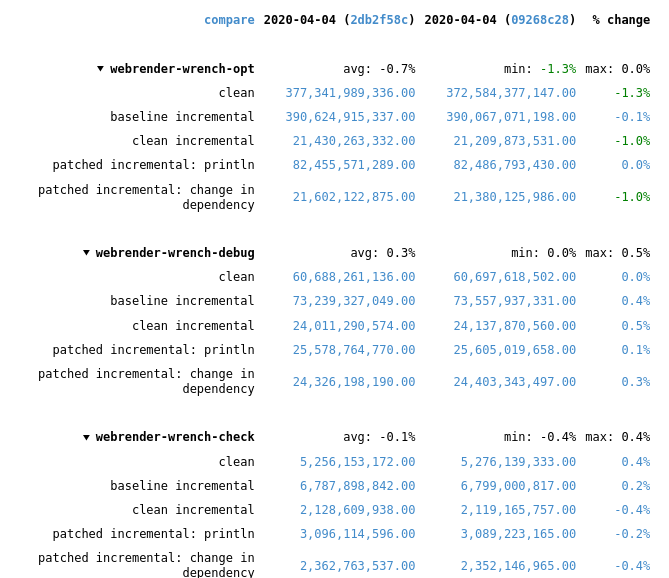

specialize some collection and iterator operations to run in-place

This is a rebase and update of #66383, which was closed due to inactivity.

Recent rustc changes made the compile-time regressions disappear, at least for webrender-wrench. Running a stage2 compile and the rustc-perf suite takes hours on the hardware I have at the moment, so I can't do much more than that.

In the best case, the `vec::bench_in_place_recycle` synthetic microbenchmark, these optimizations can provide a 15x speedup over the regular implementation, which allocates a new vec for every benchmark iteration. [Benchmark results](https://gist.github.com/the8472/6d999b2d08a2bedf3b93f12112f96e2f). In real code the speedups are tiny, but they also depend on the allocator used: a system allocator that uses a process-wide mutex will benefit more than one with thread-local pools.

## What was changed

* `SpecExtend`, which covered the `from_iter` and `extend` specializations, was split into separate traits.
* `extend` and `from_iter` now reuse `append_elements` if the passed iterators are from slices.
* A preexisting `vec.into_iter().collect::<Vec<_>>()` optimization that passed through the original vec has been generalized to also cover cases where the original has been partially drained.
* A chain of `Vec<T>` / `BinaryHeap<T>` / `Box<[T]>` `IntoIter`s through various iterator adapters collected into `Vec<U>` and `BinaryHeap<U>` will be performed in place as long as `T` and `U` have the same alignment and size and aren't ZSTs.
* To enable the above specialization, the unsafe, unstable `SourceIter` and `InPlaceIterable` traits have been added. The first allows reaching through the iterator pipeline to grab a pointer to the source memory. The latter is a marker that promises that the read pointer will advance as fast as or faster than the write pointer, and thus in-place operation is possible in the first place.
* `vec::IntoIter` implements `TrustedRandomAccess` for `T: Copy` to allow in-place collection when there is a `Zip` adapter in the iterator. TRA had to be made an unstable public trait to support this.

## In-place collectible adapters

* `Map`
* `MapWhile`
* `Filter`
* `FilterMap`
* `Fuse`
* `Skip`
* `SkipWhile`
* `Take`
* `TakeWhile`
* `Enumerate`
* `Zip` (left hand side only, `Copy` types only)
* `Peekable`
* `Scan`
* `Inspect`

## Concerns

`vec.into_iter().filter(|_| false).collect()` will no longer return a vec with 0 capacity; instead it will return its original allocation. This avoids the cost of doing any allocation or deallocation but could lead to large allocations living longer than expected. If that's not acceptable, some resizing policy at the end of the attempted in-place collect would be necessary, which in the worst case could result in one more memcopy than the non-specialized case.

## Possible followup work

* split liballoc/vec.rs to remove `ignore-tidy-filelength`
* try to get trivial chains such as `vec.into_iter().skip(1).collect::<Vec<_>>()` to compile to a `memmove` (currently compiles to a pile of SIMD, see #69187)
* improve the traits so they can be reused by other crates, e.g. itertools. I think currently they're only good enough for internal use
* allow iterators sourced from a `HashSet` to be in-place collected into a `Vec`
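The behaviour described under "Concerns" can be illustrated from the caller's side. The sketch below is not part of the commit; the function names are made up for illustration. It only relies on stable `Vec` and `Iterator` APIs, and it deliberately makes no claim about the resulting capacity, since `Vec`'s allocation behaviour is unspecified and may differ between standard library versions.

```rust
/// Runs a `filter` + `map` pipeline over an owned vector and collects it again.
/// Both adapters are on the in-place collectible list, and the item type keeps
/// its size and alignment, so this is the shape of code the specialization targets.
pub fn double_evens(src: Vec<u32>) -> Vec<u32> {
    src.into_iter().filter(|x| x % 2 == 0).map(|x| x * 2).collect()
}

/// The example from the "Concerns" section: every element is filtered out.
/// With in-place collection the (empty) result may still own the source allocation.
pub fn filter_everything(src: Vec<u32>) -> Vec<u32> {
    src.into_iter().filter(|_| false).collect()
}

/// A possible caller-side mitigation (an assumption, not something the commit adds):
/// explicitly ask the vector to release unused capacity after collecting.
pub fn filter_everything_and_shrink(src: Vec<u32>) -> Vec<u32> {
    let mut dst = filter_everything(src);
    dst.shrink_to_fit();
    dst
}
```

Callers that hold on to mostly-empty results for a long time are the ones who might want the `shrink_to_fit` variant; for short-lived results the retained allocation is usually harmless.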

commit 0d0f6b1130

|

|

@ -1,5 +1,6 @@

|

|||

use rand::prelude::*;

|

||||

use std::iter::{repeat, FromIterator};

|

||||

use test::Bencher;

|

||||

use test::{black_box, Bencher};

|

||||

|

||||

#[bench]

|

||||

fn bench_new(b: &mut Bencher) {

|

||||

|

|

@ -7,6 +8,7 @@ fn bench_new(b: &mut Bencher) {

|

|||

let v: Vec<u32> = Vec::new();

|

||||

assert_eq!(v.len(), 0);

|

||||

assert_eq!(v.capacity(), 0);

|

||||

v

|

||||

})

|

||||

}

|

||||

|

||||

|

|

@ -17,6 +19,7 @@ fn do_bench_with_capacity(b: &mut Bencher, src_len: usize) {

|

|||

let v: Vec<u32> = Vec::with_capacity(src_len);

|

||||

assert_eq!(v.len(), 0);

|

||||

assert_eq!(v.capacity(), src_len);

|

||||

v

|

||||

})

|

||||

}

|

||||

|

||||

|

|

@ -47,6 +50,7 @@ fn do_bench_from_fn(b: &mut Bencher, src_len: usize) {

|

|||

let dst = (0..src_len).collect::<Vec<_>>();

|

||||

assert_eq!(dst.len(), src_len);

|

||||

assert!(dst.iter().enumerate().all(|(i, x)| i == *x));

|

||||

dst

|

||||

})

|

||||

}

|

||||

|

||||

|

|

@ -77,6 +81,7 @@ fn do_bench_from_elem(b: &mut Bencher, src_len: usize) {

|

|||

let dst: Vec<usize> = repeat(5).take(src_len).collect();

|

||||

assert_eq!(dst.len(), src_len);

|

||||

assert!(dst.iter().all(|x| *x == 5));

|

||||

dst

|

||||

})

|

||||

}

|

||||

|

||||

|

|

@ -109,6 +114,7 @@ fn do_bench_from_slice(b: &mut Bencher, src_len: usize) {

|

|||

let dst = src.clone()[..].to_vec();

|

||||

assert_eq!(dst.len(), src_len);

|

||||

assert!(dst.iter().enumerate().all(|(i, x)| i == *x));

|

||||

dst

|

||||

});

|

||||

}

|

||||

|

||||

|

|

@ -141,6 +147,7 @@ fn do_bench_from_iter(b: &mut Bencher, src_len: usize) {

|

|||

let dst: Vec<_> = FromIterator::from_iter(src.clone());

|

||||

assert_eq!(dst.len(), src_len);

|

||||

assert!(dst.iter().enumerate().all(|(i, x)| i == *x));

|

||||

dst

|

||||

});

|

||||

}

|

||||

|

||||

|

|

@ -175,6 +182,7 @@ fn do_bench_extend(b: &mut Bencher, dst_len: usize, src_len: usize) {

|

|||

dst.extend(src.clone());

|

||||

assert_eq!(dst.len(), dst_len + src_len);

|

||||

assert!(dst.iter().enumerate().all(|(i, x)| i == *x));

|

||||

dst

|

||||

});

|

||||

}

|

||||

|

||||

|

|

@ -224,9 +232,24 @@ fn do_bench_extend_from_slice(b: &mut Bencher, dst_len: usize, src_len: usize) {

|

|||

dst.extend_from_slice(&src);

|

||||

assert_eq!(dst.len(), dst_len + src_len);

|

||||

assert!(dst.iter().enumerate().all(|(i, x)| i == *x));

|

||||

dst

|

||||

});

|

||||

}

|

||||

|

||||

#[bench]

|

||||

fn bench_extend_recycle(b: &mut Bencher) {

|

||||

let mut data = vec![0; 1000];

|

||||

|

||||

b.iter(|| {

|

||||

let tmp = std::mem::replace(&mut data, Vec::new());

|

||||

let mut to_extend = black_box(Vec::new());

|

||||

to_extend.extend(tmp.into_iter());

|

||||

data = black_box(to_extend);

|

||||

});

|

||||

|

||||

black_box(data);

|

||||

}

|

||||

|

||||

#[bench]

|

||||

fn bench_extend_from_slice_0000_0000(b: &mut Bencher) {

|

||||

do_bench_extend_from_slice(b, 0, 0)

|

||||

|

|

@ -271,6 +294,7 @@ fn do_bench_clone(b: &mut Bencher, src_len: usize) {

|

|||

let dst = src.clone();

|

||||

assert_eq!(dst.len(), src_len);

|

||||

assert!(dst.iter().enumerate().all(|(i, x)| i == *x));

|

||||

dst

|

||||

});

|

||||

}

|

||||

|

||||

|

|

@ -305,10 +329,10 @@ fn do_bench_clone_from(b: &mut Bencher, times: usize, dst_len: usize, src_len: u

|

|||

|

||||

for _ in 0..times {

|

||||

dst.clone_from(&src);

|

||||

|

||||

assert_eq!(dst.len(), src_len);

|

||||

assert!(dst.iter().enumerate().all(|(i, x)| dst_len + i == *x));

|

||||

}

|

||||

dst

|

||||

});

|

||||

}

|

||||

|

||||

|

|

@ -431,3 +455,220 @@ fn bench_clone_from_10_0100_0010(b: &mut Bencher) {

|

|||

fn bench_clone_from_10_1000_0100(b: &mut Bencher) {

|

||||

do_bench_clone_from(b, 10, 1000, 100)

|

||||

}

|

||||

|

||||

macro_rules! bench_in_place {

|

||||

(

|

||||

$($fname:ident, $type:ty , $count:expr, $init: expr);*

|

||||

) => {

|

||||

$(

|

||||

#[bench]

|

||||

fn $fname(b: &mut Bencher) {

|

||||

b.iter(|| {

|

||||

let src: Vec<$type> = black_box(vec![$init; $count]);

|

||||

let mut sink = src.into_iter()

|

||||

.enumerate()

|

||||

.map(|(idx, e)| { (idx as $type) ^ e }).collect::<Vec<$type>>();

|

||||

black_box(sink.as_mut_ptr())

|

||||

});

|

||||

}

|

||||

)+

|

||||

};

|

||||

}

|

||||

|

||||

bench_in_place![

|

||||

bench_in_place_xxu8_i0_0010, u8, 10, 0;

|

||||

bench_in_place_xxu8_i0_0100, u8, 100, 0;

|

||||

bench_in_place_xxu8_i0_1000, u8, 1000, 0;

|

||||

bench_in_place_xxu8_i1_0010, u8, 10, 1;

|

||||

bench_in_place_xxu8_i1_0100, u8, 100, 1;

|

||||

bench_in_place_xxu8_i1_1000, u8, 1000, 1;

|

||||

bench_in_place_xu32_i0_0010, u32, 10, 0;

|

||||

bench_in_place_xu32_i0_0100, u32, 100, 0;

|

||||

bench_in_place_xu32_i0_1000, u32, 1000, 0;

|

||||

bench_in_place_xu32_i1_0010, u32, 10, 1;

|

||||

bench_in_place_xu32_i1_0100, u32, 100, 1;

|

||||

bench_in_place_xu32_i1_1000, u32, 1000, 1;

|

||||

bench_in_place_u128_i0_0010, u128, 10, 0;

|

||||

bench_in_place_u128_i0_0100, u128, 100, 0;

|

||||

bench_in_place_u128_i0_1000, u128, 1000, 0;

|

||||

bench_in_place_u128_i1_0010, u128, 10, 1;

|

||||

bench_in_place_u128_i1_0100, u128, 100, 1;

|

||||

bench_in_place_u128_i1_1000, u128, 1000, 1

|

||||

];

|

||||

|

||||

#[bench]

|

||||

fn bench_in_place_recycle(b: &mut test::Bencher) {

|

||||

let mut data = vec![0; 1000];

|

||||

|

||||

b.iter(|| {

|

||||

let tmp = std::mem::replace(&mut data, Vec::new());

|

||||

data = black_box(

|

||||

tmp.into_iter()

|

||||

.enumerate()

|

||||

.map(|(idx, e)| idx.wrapping_add(e))

|

||||

.fuse()

|

||||

.peekable()

|

||||

.collect::<Vec<usize>>(),

|

||||

);

|

||||

});

|

||||

}

|

||||

|

||||

#[bench]

|

||||

fn bench_in_place_zip_recycle(b: &mut test::Bencher) {

|

||||

let mut data = vec![0u8; 1000];

|

||||

let mut rng = rand::thread_rng();

|

||||

let mut subst = vec![0u8; 1000];

|

||||

rng.fill_bytes(&mut subst[..]);

|

||||

|

||||

b.iter(|| {

|

||||

let tmp = std::mem::replace(&mut data, Vec::new());

|

||||

let mangled = tmp

|

||||

.into_iter()

|

||||

.zip(subst.iter().copied())

|

||||

.enumerate()

|

||||

.map(|(i, (d, s))| d.wrapping_add(i as u8) ^ s)

|

||||

.collect::<Vec<_>>();

|

||||

assert_eq!(mangled.len(), 1000);

|

||||

data = black_box(mangled);

|

||||

});

|

||||

}

|

||||

|

||||

#[bench]

|

||||

fn bench_in_place_zip_iter_mut(b: &mut test::Bencher) {

|

||||

let mut data = vec![0u8; 256];

|

||||

let mut rng = rand::thread_rng();

|

||||

let mut subst = vec![0u8; 1000];

|

||||

rng.fill_bytes(&mut subst[..]);

|

||||

|

||||

b.iter(|| {

|

||||

data.iter_mut().enumerate().for_each(|(i, d)| {

|

||||

*d = d.wrapping_add(i as u8) ^ subst[i];

|

||||

});

|

||||

});

|

||||

|

||||

black_box(data);

|

||||

}

|

||||

|

||||

#[derive(Clone)]

|

||||

struct Droppable(usize);

|

||||

|

||||

impl Drop for Droppable {

|

||||

fn drop(&mut self) {

|

||||

black_box(self);

|

||||

}

|

||||

}

|

||||

|

||||

#[bench]

|

||||

fn bench_in_place_collect_droppable(b: &mut test::Bencher) {

|

||||

let v: Vec<Droppable> = std::iter::repeat_with(|| Droppable(0)).take(1000).collect();

|

||||

b.iter(|| {

|

||||

v.clone()

|

||||

.into_iter()

|

||||

.skip(100)

|

||||

.enumerate()

|

||||

.map(|(i, e)| Droppable(i ^ e.0))

|

||||

.collect::<Vec<_>>()

|

||||

})

|

||||

}

|

||||

|

||||

#[bench]

|

||||

fn bench_chain_collect(b: &mut test::Bencher) {

|

||||

let data = black_box([0; LEN]);

|

||||

b.iter(|| data.iter().cloned().chain([1].iter().cloned()).collect::<Vec<_>>());

|

||||

}

|

||||

|

||||

#[bench]

|

||||

fn bench_chain_chain_collect(b: &mut test::Bencher) {

|

||||

let data = black_box([0; LEN]);

|

||||

b.iter(|| {

|

||||

data.iter()

|

||||

.cloned()

|

||||

.chain([1].iter().cloned())

|

||||

.chain([2].iter().cloned())

|

||||

.collect::<Vec<_>>()

|

||||

});

|

||||

}

|

||||

|

||||

#[bench]

|

||||

fn bench_nest_chain_chain_collect(b: &mut test::Bencher) {

|

||||

let data = black_box([0; LEN]);

|

||||

b.iter(|| {

|

||||

data.iter().cloned().chain([1].iter().chain([2].iter()).cloned()).collect::<Vec<_>>()

|

||||

});

|

||||

}

|

||||

|

||||

pub fn example_plain_slow(l: &[u32]) -> Vec<u32> {

|

||||

let mut result = Vec::with_capacity(l.len());

|

||||

result.extend(l.iter().rev());

|

||||

result

|

||||

}

|

||||

|

||||

pub fn map_fast(l: &[(u32, u32)]) -> Vec<u32> {

|

||||

let mut result = Vec::with_capacity(l.len());

|

||||

for i in 0..l.len() {

|

||||

unsafe {

|

||||

*result.get_unchecked_mut(i) = l[i].0;

|

||||

result.set_len(i);

|

||||

}

|

||||

}

|

||||

result

|

||||

}

|

||||

|

||||

const LEN: usize = 16384;

|

||||

|

||||

#[bench]

|

||||

fn bench_range_map_collect(b: &mut test::Bencher) {

|

||||

b.iter(|| (0..LEN).map(|_| u32::default()).collect::<Vec<_>>());

|

||||

}

|

||||

|

||||

#[bench]

|

||||

fn bench_chain_extend_ref(b: &mut test::Bencher) {

|

||||

let data = black_box([0; LEN]);

|

||||

b.iter(|| {

|

||||

let mut v = Vec::<u32>::with_capacity(data.len() + 1);

|

||||

v.extend(data.iter().chain([1].iter()));

|

||||

v

|

||||

});

|

||||

}

|

||||

|

||||

#[bench]

|

||||

fn bench_chain_extend_value(b: &mut test::Bencher) {

|

||||

let data = black_box([0; LEN]);

|

||||

b.iter(|| {

|

||||

let mut v = Vec::<u32>::with_capacity(data.len() + 1);

|

||||

v.extend(data.iter().cloned().chain(Some(1)));

|

||||

v

|

||||

});

|

||||

}

|

||||

|

||||

#[bench]

|

||||

fn bench_rev_1(b: &mut test::Bencher) {

|

||||

let data = black_box([0; LEN]);

|

||||

b.iter(|| {

|

||||

let mut v = Vec::<u32>::new();

|

||||

v.extend(data.iter().rev());

|

||||

v

|

||||

});

|

||||

}

|

||||

|

||||

#[bench]

|

||||

fn bench_rev_2(b: &mut test::Bencher) {

|

||||

let data = black_box([0; LEN]);

|

||||

b.iter(|| example_plain_slow(&data));

|

||||

}

|

||||

|

||||

#[bench]

|

||||

fn bench_map_regular(b: &mut test::Bencher) {

|

||||

let data = black_box([(0, 0); LEN]);

|

||||

b.iter(|| {

|

||||

let mut v = Vec::<u32>::new();

|

||||

v.extend(data.iter().map(|t| t.1));

|

||||

v

|

||||

});

|

||||

}

|

||||

|

||||

#[bench]

|

||||

fn bench_map_fast(b: &mut test::Bencher) {

|

||||

let data = black_box([(0, 0); LEN]);

|

||||

b.iter(|| map_fast(&data));

|

||||

}

|

||||

|

|

|

|||

|

|

@ -145,13 +145,13 @@

|

|||

#![stable(feature = "rust1", since = "1.0.0")]

|

||||

|

||||

use core::fmt;

|

||||

use core::iter::{FromIterator, FusedIterator, TrustedLen};

|

||||

use core::iter::{FromIterator, FusedIterator, InPlaceIterable, SourceIter, TrustedLen};

|

||||

use core::mem::{self, size_of, swap, ManuallyDrop};

|

||||

use core::ops::{Deref, DerefMut};

|

||||

use core::ptr;

|

||||

|

||||

use crate::slice;

|

||||

use crate::vec::{self, Vec};

|

||||

use crate::vec::{self, AsIntoIter, Vec};

|

||||

|

||||

use super::SpecExtend;

|

||||

|

||||

|

|

@ -1173,6 +1173,27 @@ impl<T> ExactSizeIterator for IntoIter<T> {

|

|||

#[stable(feature = "fused", since = "1.26.0")]

|

||||

impl<T> FusedIterator for IntoIter<T> {}

|

||||

|

||||

#[unstable(issue = "none", feature = "inplace_iteration")]

|

||||

unsafe impl<T> SourceIter for IntoIter<T> {

|

||||

type Source = IntoIter<T>;

|

||||

|

||||

#[inline]

|

||||

unsafe fn as_inner(&mut self) -> &mut Self::Source {

|

||||

self

|

||||

}

|

||||

}

|

||||

|

||||

#[unstable(issue = "none", feature = "inplace_iteration")]

|

||||

unsafe impl<I> InPlaceIterable for IntoIter<I> {}

|

||||

|

||||

impl<I> AsIntoIter for IntoIter<I> {

|

||||

type Item = I;

|

||||

|

||||

fn as_into_iter(&mut self) -> &mut vec::IntoIter<Self::Item> {

|

||||

&mut self.iter

|

||||

}

|

||||

}

|

||||

|

||||

#[unstable(feature = "binary_heap_into_iter_sorted", issue = "59278")]

|

||||

#[derive(Clone, Debug)]

|

||||

pub struct IntoIterSorted<T> {

|

||||

|

|

|

|||

|

|

@ -99,6 +99,7 @@

|

|||

#![feature(fmt_internals)]

|

||||

#![feature(fn_traits)]

|

||||

#![feature(fundamental)]

|

||||

#![feature(inplace_iteration)]

|

||||

#![feature(internal_uninit_const)]

|

||||

#![feature(lang_items)]

|

||||

#![feature(layout_for_ptr)]

|

||||

|

|

@ -106,6 +107,7 @@

|

|||

#![feature(map_first_last)]

|

||||

#![feature(map_into_keys_values)]

|

||||

#![feature(negative_impls)]

|

||||

#![feature(never_type)]

|

||||

#![feature(new_uninit)]

|

||||

#![feature(nll)]

|

||||

#![feature(nonnull_slice_from_raw_parts)]

|

||||

|

|

@ -133,7 +135,9 @@

|

|||

#![feature(slice_partition_dedup)]

|

||||

#![feature(maybe_uninit_extra, maybe_uninit_slice)]

|

||||

#![feature(alloc_layout_extra)]

|

||||

#![feature(trusted_random_access)]

|

||||

#![feature(try_trait)]

|

||||

#![feature(type_alias_impl_trait)]

|

||||

#![feature(associated_type_bounds)]

|

||||

// Allow testing this library

|

||||

|

||||

|

|

|

|||

|

|

@ -58,7 +58,9 @@ use core::cmp::{self, Ordering};

|

|||

use core::fmt;

|

||||

use core::hash::{Hash, Hasher};

|

||||

use core::intrinsics::{arith_offset, assume};

|

||||

use core::iter::{FromIterator, FusedIterator, TrustedLen};

|

||||

use core::iter::{

|

||||

FromIterator, FusedIterator, InPlaceIterable, SourceIter, TrustedLen, TrustedRandomAccess,

|

||||

};

|

||||

use core::marker::PhantomData;

|

||||

use core::mem::{self, ManuallyDrop, MaybeUninit};

|

||||

use core::ops::Bound::{Excluded, Included, Unbounded};

|

||||

|

|

@ -2012,7 +2014,7 @@ impl<T, I: SliceIndex<[T]>> IndexMut<I> for Vec<T> {

|

|||

impl<T> FromIterator<T> for Vec<T> {

|

||||

#[inline]

|

||||

fn from_iter<I: IntoIterator<Item = T>>(iter: I) -> Vec<T> {

|

||||

<Self as SpecExtend<T, I::IntoIter>>::from_iter(iter.into_iter())

|

||||

<Self as SpecFromIter<T, I::IntoIter>>::from_iter(iter.into_iter())

|

||||

}

|

||||

}

|

||||

|

||||

|

|

@ -2094,13 +2096,39 @@ impl<T> Extend<T> for Vec<T> {

|

|||

}

|

||||

}

|

||||

|

||||

// Specialization trait used for Vec::from_iter and Vec::extend

|

||||

trait SpecExtend<T, I> {

|

||||

/// Specialization trait used for Vec::from_iter

|

||||

///

|

||||

/// ## The delegation graph:

|

||||

///

|

||||

/// ```text

|

||||

/// +-------------+

|

||||

/// |FromIterator |

|

||||

/// +-+-----------+

|

||||

/// |

|

||||

/// v

|

||||

/// +-+-------------------------------+ +---------------------+

|

||||

/// |SpecFromIter +---->+SpecFromIterNested |

|

||||

/// |where I: | | |where I: |

|

||||

/// | Iterator (default)----------+ | | Iterator (default) |

|

||||

/// | vec::IntoIter | | | TrustedLen |

|

||||

/// | SourceIterMarker---fallback-+ | | |

|

||||

/// | slice::Iter | | |

|

||||

/// | Iterator<Item = &Clone> | +---------------------+

|

||||

/// +---------------------------------+

|

||||

///

|

||||

/// ```

|

||||

trait SpecFromIter<T, I> {

|

||||

fn from_iter(iter: I) -> Self;

|

||||

fn spec_extend(&mut self, iter: I);

|

||||

}

|

||||

|

||||

impl<T, I> SpecExtend<T, I> for Vec<T>

|

||||

/// Another specialization trait for Vec::from_iter

|

||||

/// necessary to manually prioritize overlapping specializations

|

||||

/// see [`SpecFromIter`] for details.

|

||||

trait SpecFromIterNested<T, I> {

|

||||

fn from_iter(iter: I) -> Self;

|

||||

}

|

||||

|

||||

impl<T, I> SpecFromIterNested<T, I> for Vec<T>

|

||||

where

|

||||

I: Iterator<Item = T>,

|

||||

{

|

||||

|

|

@ -2122,10 +2150,224 @@ where

|

|||

vector

|

||||

}

|

||||

};

|

||||

// must delegate to spec_extend() since extend() itself delegates

|

||||

// to spec_from for empty Vecs

|

||||

<Vec<T> as SpecExtend<T, I>>::spec_extend(&mut vector, iterator);

|

||||

vector

|

||||

}

|

||||

}

|

||||

|

||||

impl<T, I> SpecFromIterNested<T, I> for Vec<T>

|

||||

where

|

||||

I: TrustedLen<Item = T>,

|

||||

{

|

||||

fn from_iter(iterator: I) -> Self {

|

||||

let mut vector = Vec::new();

|

||||

// must delegate to spec_extend() since extend() itself delegates

|

||||

// to spec_from for empty Vecs

|

||||

vector.spec_extend(iterator);

|

||||

vector

|

||||

}

|

||||

}

|

||||

|

||||

impl<T, I> SpecFromIter<T, I> for Vec<T>

|

||||

where

|

||||

I: Iterator<Item = T>,

|

||||

{

|

||||

default fn from_iter(iterator: I) -> Self {

|

||||

SpecFromIterNested::from_iter(iterator)

|

||||

}

|

||||

}

|

||||

|

||||

// A helper struct for in-place iteration that drops the destination slice of iteration,

|

||||

// i.e. the head. The source slice (the tail) is dropped by IntoIter.

|

||||

struct InPlaceDrop<T> {

|

||||

inner: *mut T,

|

||||

dst: *mut T,

|

||||

}

|

||||

|

||||

impl<T> InPlaceDrop<T> {

|

||||

fn len(&self) -> usize {

|

||||

unsafe { self.dst.offset_from(self.inner) as usize }

|

||||

}

|

||||

}

|

||||

|

||||

impl<T> Drop for InPlaceDrop<T> {

|

||||

#[inline]

|

||||

fn drop(&mut self) {

|

||||

if mem::needs_drop::<T>() {

|

||||

unsafe {

|

||||

ptr::drop_in_place(slice::from_raw_parts_mut(self.inner, self.len()));

|

||||

}

|

||||

}

|

||||

}

|

||||

}
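The `InPlaceDrop` helper above is a drop guard: if the iterator pipeline panics halfway through, the elements already written to the head of the buffer still get dropped. The following is a simplified sketch of the same pattern, not the commit's code. It works on a `MaybeUninit` slice instead of raw pointers, and `PrefixGuard` and `fill_with` are hypothetical names.

```rust
use std::mem::{self, MaybeUninit};
use std::ptr;

/// Hypothetical guard: on drop, destroys the first `len` slots of `buf`,
/// which are the ones that have been initialized so far.
struct PrefixGuard<'a, T> {
    buf: &'a mut [MaybeUninit<T>],
    len: usize,
}

impl<'a, T> Drop for PrefixGuard<'a, T> {
    fn drop(&mut self) {
        // SAFETY: `fill_with` only increments `len` after writing a slot,
        // so slots `0..len` hold valid values of type `T`.
        unsafe {
            let init = &mut self.buf[..self.len] as *mut [MaybeUninit<T>] as *mut [T];
            ptr::drop_in_place(init);
        }
    }
}

/// Fills every slot of `buf` with `make(index)`.
/// If `make` panics, the guard drops the slots written so far.
pub fn fill_with<T>(buf: &mut [MaybeUninit<T>], mut make: impl FnMut(usize) -> T) {
    let mut guard = PrefixGuard { buf, len: 0 };
    for i in 0..guard.buf.len() {
        let value = make(i); // may panic; the guard then cleans up slots 0..len
        guard.buf[i].write(value);
        guard.len += 1;
    }
    // Success: the caller now owns the initialized slots, so skip the guard's drop.
    mem::forget(guard);
}
```

The commit's version achieves the "success" step with `ManuallyDrop::new(sink)` after `try_fold` returns, which plays the same role as `mem::forget` here.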

|

||||

|

||||

impl<T> SpecFromIter<T, IntoIter<T>> for Vec<T> {

|

||||

fn from_iter(iterator: IntoIter<T>) -> Self {

|

||||

// A common case is passing a vector into a function which immediately

|

||||

// re-collects into a vector. We can short circuit this if the IntoIter

|

||||

// has not been advanced at all.

|

||||

// When it has been advanced we can also reuse the memory and move the data to the front.

|

||||

// But we only do so when the resulting Vec wouldn't have more unused capacity

|

||||

// than creating it through the generic FromIterator implementation would. That limitation

|

||||

// is not strictly necessary as Vec's allocation behavior is intentionally unspecified.

|

||||

// But it is a conservative choice.

|

||||

let has_advanced = iterator.buf.as_ptr() as *const _ != iterator.ptr;

|

||||

if !has_advanced || iterator.len() >= iterator.cap / 2 {

|

||||

unsafe {

|

||||

let it = ManuallyDrop::new(iterator);

|

||||

if has_advanced {

|

||||

ptr::copy(it.ptr, it.buf.as_ptr(), it.len());

|

||||

}

|

||||

return Vec::from_raw_parts(it.buf.as_ptr(), it.len(), it.cap);

|

||||

}

|

||||

}

|

||||

|

||||

let mut vec = Vec::new();

|

||||

// must delegate to spec_extend() since extend() itself delegates

|

||||

// to spec_from for empty Vecs

|

||||

vec.spec_extend(iterator);

|

||||

vec

|

||||

}

|

||||

}
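The reuse condition in the hunk above can be read as a small predicate: keep the source allocation if the iterator was never advanced, or if at least half of the capacity is still occupied by remaining elements. The sketch below restates that condition as a free function with a hypothetical name, next to a caller-side helper that produces a partially drained `IntoIter`. Whether the allocation is actually reused is an implementation detail that the sketch does not assert.

```rust
/// Hypothetical restatement of the condition used in the specialization:
/// `!has_advanced || iterator.len() >= iterator.cap / 2`.
pub fn would_reuse_allocation(has_advanced: bool, remaining: usize, cap: usize) -> bool {
    !has_advanced || remaining >= cap / 2
}

/// Consumes up to `n` elements from the front, then collects the rest.
/// This is the "partially drained" case the commit message refers to.
pub fn skip_front_and_recollect(v: Vec<u32>, n: usize) -> Vec<u32> {
    let mut it = v.into_iter();
    for _ in 0..n {
        let _ = it.next();
    }
    it.collect()
}
```

For example, with a capacity of 100, an advanced iterator with 50 elements left would still reuse its buffer under this rule, while one with 49 left would fall back to a fresh allocation.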

|

||||

|

||||

fn write_in_place_with_drop<T>(

|

||||

src_end: *const T,

|

||||

) -> impl FnMut(InPlaceDrop<T>, T) -> Result<InPlaceDrop<T>, !> {

|

||||

move |mut sink, item| {

|

||||

unsafe {

|

||||

// the InPlaceIterable contract cannot be verified precisely here since

|

||||

// try_fold has an exclusive reference to the source pointer

|

||||

// all we can do is check if it's still in range

|

||||

debug_assert!(sink.dst as *const _ <= src_end, "InPlaceIterable contract violation");

|

||||

ptr::write(sink.dst, item);

|

||||

sink.dst = sink.dst.add(1);

|

||||

}

|

||||

Ok(sink)

|

||||

}

|

||||

}

|

||||

|

||||

/// Specialization marker for collecting an iterator pipeline into a Vec while reusing the

|

||||

/// source allocation, i.e. executing the pipeline in place.

|

||||

///

|

||||

/// The SourceIter parent trait is necessary for the specializing function to access the allocation

|

||||

/// which is to be reused. But it is not sufficient for the specialization to be valid. See

|

||||

/// additional bounds on the impl.

|

||||

#[rustc_unsafe_specialization_marker]

|

||||

trait SourceIterMarker: SourceIter<Source: AsIntoIter> {}

|

||||

|

||||

// The std-internal SourceIter/InPlaceIterable traits are only implemented by chains of

|

||||

// Adapter<Adapter<Adapter<IntoIter>>> (all owned by core/std). Additional bounds

|

||||

// on the adapter implementations (beyond `impl<I: Trait> Trait for Adapter<I>`) only depend on other

|

||||

// traits already marked as specialization traits (Copy, TrustedRandomAccess, FusedIterator).

|

||||

// I.e. the marker does not depend on lifetimes of user-supplied types. Modulo the Copy hole, which

|

||||

// several other specializations already depend on.

|

||||

impl<T> SourceIterMarker for T where T: SourceIter<Source: AsIntoIter> + InPlaceIterable {}

|

||||

|

||||

impl<T, I> SpecFromIter<T, I> for Vec<T>

|

||||

where

|

||||

I: Iterator<Item = T> + SourceIterMarker,

|

||||

{

|

||||

default fn from_iter(mut iterator: I) -> Self {

|

||||

// Additional requirements which cannot be expressed via trait bounds. We rely on const eval

|

||||

// instead:

|

||||

// a) no ZSTs as there would be no allocation to reuse and pointer arithmetic would panic

|

||||

// b) size match as required by Alloc contract

|

||||

// c) alignments match as required by Alloc contract

|

||||

if mem::size_of::<T>() == 0

|

||||

|| mem::size_of::<T>()

|

||||

!= mem::size_of::<<<I as SourceIter>::Source as AsIntoIter>::Item>()

|

||||

|| mem::align_of::<T>()

|

||||

!= mem::align_of::<<<I as SourceIter>::Source as AsIntoIter>::Item>()

|

||||

{

|

||||

// fallback to more generic implementations

|

||||

return SpecFromIterNested::from_iter(iterator);

|

||||

}

|

||||

|

||||

let (src_buf, src_ptr, dst_buf, dst_end, cap) = unsafe {

|

||||

let inner = iterator.as_inner().as_into_iter();

|

||||

(

|

||||

inner.buf.as_ptr(),

|

||||

inner.ptr,

|

||||

inner.buf.as_ptr() as *mut T,

|

||||

inner.end as *const T,

|

||||

inner.cap,

|

||||

)

|

||||

};

|

||||

|

||||

// use try-fold since

|

||||

// - it vectorizes better for some iterator adapters

|

||||

// - unlike most internal iteration methods it only takes a &mut self

|

||||

// - it lets us thread the write pointer through its innards and get it back in the end

|

||||

let sink = InPlaceDrop { inner: dst_buf, dst: dst_buf };

|

||||

let sink = iterator

|

||||

.try_fold::<_, _, Result<_, !>>(sink, write_in_place_with_drop(dst_end))

|

||||

.unwrap();

|

||||

// iteration succeeded, don't drop head

|

||||

let dst = mem::ManuallyDrop::new(sink).dst;

|

||||

|

||||

let src = unsafe { iterator.as_inner().as_into_iter() };

|

||||

// check if SourceIter contract was upheld

|

||||

// caveat: if they weren't we may not even make it to this point

|

||||

debug_assert_eq!(src_buf, src.buf.as_ptr());

|

||||

// check InPlaceIterable contract. This is only possible if the iterator advanced the

|

||||

// source pointer at all. If it uses unchecked access via TrustedRandomAccess

|

||||

// then the source pointer will stay in its initial position and we can't use it as reference

|

||||

if src.ptr != src_ptr {

|

||||

debug_assert!(

|

||||

dst as *const _ <= src.ptr,

|

||||

"InPlaceIterable contract violation, write pointer advanced beyond read pointer"

|

||||

);

|

||||

}

|

||||

|

||||

// drop any remaining values at the tail of the source

|

||||

src.drop_remaining();

|

||||

// but prevent drop of the allocation itself once IntoIter goes out of scope

|

||||

src.forget_allocation();

|

||||

|

||||

let vec = unsafe {

|

||||

let len = dst.offset_from(dst_buf) as usize;

|

||||

Vec::from_raw_parts(dst_buf, len, cap)

|

||||

};

|

||||

|

||||

vec

|

||||

}

|

||||

}
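The core idea of the specialization above is that a write cursor trails a read cursor inside one buffer, guarded by a size and alignment check on the item types. The following sketch shows that idea in safe Rust under simplifying assumptions: input and output share one `Copy` type, so no drop handling is needed, and indices stand in for the raw pointers. `layout_compatible` and `filter_map_in_place` are hypothetical names, not part of the commit.

```rust
use std::mem::{align_of, size_of};

/// Mirrors the three layout conditions checked before collecting in place:
/// no ZSTs, equal size, equal alignment.
pub fn layout_compatible<Src, Dst>() -> bool {
    size_of::<Dst>() != 0
        && size_of::<Dst>() == size_of::<Src>()
        && align_of::<Dst>() == align_of::<Src>()
}

/// Filters and maps `buf` without allocating a second vector.
/// The write index never overtakes the read index, which is the property
/// the `InPlaceIterable` marker promises for the real adapters.
pub fn filter_map_in_place<T: Copy>(
    mut buf: Vec<T>,
    mut f: impl FnMut(T) -> Option<T>,
) -> Vec<T> {
    let mut write = 0;
    for read in 0..buf.len() {
        if let Some(out) = f(buf[read]) {
            debug_assert!(write <= read, "write index overtook read index");
            buf[write] = out;
            write += 1;
        }
    }
    // Everything past `write` is stale input; cut it off but keep the allocation.
    buf.truncate(write);
    buf
}
```

Unlike this sketch, the commit's implementation also handles item types with destructors and differing source and destination types, which is why it needs `InPlaceDrop`, `drop_remaining` and `forget_allocation`.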

|

||||

|

||||

impl<'a, T: 'a, I> SpecFromIter<&'a T, I> for Vec<T>

|

||||

where

|

||||

I: Iterator<Item = &'a T>,

|

||||

T: Clone,

|

||||

{

|

||||

default fn from_iter(iterator: I) -> Self {

|

||||

SpecFromIter::from_iter(iterator.cloned())

|

||||

}

|

||||

}

|

||||

|

||||

impl<'a, T: 'a> SpecFromIter<&'a T, slice::Iter<'a, T>> for Vec<T>

|

||||

where

|

||||

T: Copy,

|

||||

{

|

||||

// reuses the extend specialization for T: Copy

|

||||

fn from_iter(iterator: slice::Iter<'a, T>) -> Self {

|

||||

let mut vec = Vec::new();

|

||||

// must delegate to spec_extend() since extend() itself delegates

|

||||

// to spec_from for empty Vecs

|

||||

vec.spec_extend(iterator);

|

||||

vec

|

||||

}

|

||||

}

|

||||

|

||||

// Specialization trait used for Vec::extend

|

||||

trait SpecExtend<T, I> {

|

||||

fn spec_extend(&mut self, iter: I);

|

||||

}

|

||||

|

||||

impl<T, I> SpecExtend<T, I> for Vec<T>

|

||||

where

|

||||

I: Iterator<Item = T>,

|

||||

{

|

||||

default fn spec_extend(&mut self, iter: I) {

|

||||

self.extend_desugared(iter)

|

||||

}

|

||||

|

|

@ -2135,12 +2377,6 @@ impl<T, I> SpecExtend<T, I> for Vec<T>

|

|||

where

|

||||

I: TrustedLen<Item = T>,

|

||||

{

|

||||

default fn from_iter(iterator: I) -> Self {

|

||||

let mut vector = Vec::new();

|

||||

vector.spec_extend(iterator);

|

||||

vector

|

||||

}

|

||||

|

||||

default fn spec_extend(&mut self, iterator: I) {

|

||||

// This is the case for a TrustedLen iterator.

|

||||

let (low, high) = iterator.size_hint();

|

||||

|

|

@ -2171,22 +2407,6 @@ where

|

|||

}

|

||||

|

||||

impl<T> SpecExtend<T, IntoIter<T>> for Vec<T> {

|

||||

fn from_iter(iterator: IntoIter<T>) -> Self {

|

||||

// A common case is passing a vector into a function which immediately

|

||||

// re-collects into a vector. We can short circuit this if the IntoIter

|

||||

// has not been advanced at all.

|

||||

if iterator.buf.as_ptr() as *const _ == iterator.ptr {

|

||||

unsafe {

|

||||

let it = ManuallyDrop::new(iterator);

|

||||

Vec::from_raw_parts(it.buf.as_ptr(), it.len(), it.cap)

|

||||

}

|

||||

} else {

|

||||

let mut vector = Vec::new();

|

||||

vector.spec_extend(iterator);

|

||||

vector

|

||||

}

|

||||

}

|

||||

|

||||

fn spec_extend(&mut self, mut iterator: IntoIter<T>) {

|

||||

unsafe {

|

||||

self.append_elements(iterator.as_slice() as _);

|

||||

|

|

@ -2200,10 +2420,6 @@ where

|

|||

I: Iterator<Item = &'a T>,

|

||||

T: Clone,

|

||||

{

|

||||

default fn from_iter(iterator: I) -> Self {

|

||||

SpecExtend::from_iter(iterator.cloned())

|

||||

}

|

||||

|

||||

default fn spec_extend(&mut self, iterator: I) {

|

||||

self.spec_extend(iterator.cloned())

|

||||

}

|

||||

|

|

@ -2215,17 +2431,13 @@ where

|

|||

{

|

||||

fn spec_extend(&mut self, iterator: slice::Iter<'a, T>) {

|

||||

let slice = iterator.as_slice();

|

||||

self.reserve(slice.len());

|

||||

unsafe {

|

||||

let len = self.len();

|

||||

let dst_slice = slice::from_raw_parts_mut(self.as_mut_ptr().add(len), slice.len());

|

||||

dst_slice.copy_from_slice(slice);

|

||||

self.set_len(len + slice.len());

|

||||

}

|

||||

unsafe { self.append_elements(slice) };

|

||||

}

|

||||

}

|

||||

|

||||

impl<T> Vec<T> {

|

||||

// leaf method to which various SpecFrom/SpecExtend implementations delegate when

|

||||

// they have no further optimizations to apply

|

||||

fn extend_desugared<I: Iterator<Item = T>>(&mut self, mut iterator: I) {

|

||||

// This is the case for a general iterator.

|

||||

//

|

||||

|

|

@ -2656,6 +2868,24 @@ impl<T> IntoIter<T> {

|

|||

fn as_raw_mut_slice(&mut self) -> *mut [T] {

|

||||

ptr::slice_from_raw_parts_mut(self.ptr as *mut T, self.len())

|

||||

}

|

||||

|

||||

fn drop_remaining(&mut self) {

|

||||

if mem::needs_drop::<T>() {

|

||||

unsafe {

|

||||

ptr::drop_in_place(self.as_mut_slice());

|

||||

}

|

||||

}

|

||||

self.ptr = self.end;

|

||||

}

|

||||

|

||||

/// Relinquishes the backing allocation, equivalent to

|

||||

/// `ptr::write(&mut self, Vec::new().into_iter())`

|

||||

fn forget_allocation(&mut self) {

|

||||

self.cap = 0;

|

||||

self.buf = unsafe { NonNull::new_unchecked(RawVec::NEW.ptr()) };

|

||||

self.ptr = self.buf.as_ptr();

|

||||

self.end = self.buf.as_ptr();

|

||||

}

|

||||

}

|

||||

|

||||

#[stable(feature = "vec_intoiter_as_ref", since = "1.46.0")]

|

||||

|

|

@ -2712,6 +2942,17 @@ impl<T> Iterator for IntoIter<T> {

|

|||

fn count(self) -> usize {

|

||||

self.len()

|

||||

}

|

||||

|

||||

unsafe fn get_unchecked(&mut self, i: usize) -> Self::Item

|

||||

where

|

||||

Self: TrustedRandomAccess,

|

||||

{

|

||||

// SAFETY: the caller must uphold the contract for

|

||||

// `Iterator::get_unchecked`.

|

||||

unsafe {

|

||||

if mem::size_of::<T>() == 0 { mem::zeroed() } else { ptr::read(self.ptr.add(i)) }

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

#[stable(feature = "rust1", since = "1.0.0")]

|

||||

|

|

@ -2751,6 +2992,19 @@ impl<T> FusedIterator for IntoIter<T> {}

|

|||

#[unstable(feature = "trusted_len", issue = "37572")]

|

||||

unsafe impl<T> TrustedLen for IntoIter<T> {}

|

||||

|

||||

#[doc(hidden)]

|

||||

#[unstable(issue = "none", feature = "std_internals")]

|

||||

// T: Copy as approximation for !Drop since get_unchecked does not advance self.ptr

|

||||

// and thus we can't implement drop-handling

|

||||

unsafe impl<T> TrustedRandomAccess for IntoIter<T>

|

||||

where

|

||||

T: Copy,

|

||||

{

|

||||

fn may_have_side_effect() -> bool {

|

||||

false

|

||||

}

|

||||

}

|

||||

|

||||

#[stable(feature = "vec_into_iter_clone", since = "1.8.0")]

|

||||

impl<T: Clone> Clone for IntoIter<T> {

|

||||

fn clone(&self) -> IntoIter<T> {

|

||||

|

|
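The `TrustedRandomAccess` impl above is what lets a `Zip` adapter sit in a collectible pipeline when the left-hand side is a `vec::IntoIter` over a `Copy` type. A small sketch of such a pipeline follows; `add_offsets` is a hypothetical name, and whether the collect actually reuses the source allocation depends on the standard library version, so the example only checks the resulting values.

```rust
/// Adds 1 to every element by zipping with an endless iterator of ones.
/// The left-hand side of the zip is a `vec::IntoIter<u32>`, and `u32` is `Copy`.
fn add_offsets(values: Vec<u32>) -> Vec<u32> {
    values
        .into_iter()
        .zip(std::iter::repeat(1u32))
        .map(|(a, b)| a + b)
        .collect()
}

fn main() {
    println!("{:?}", add_offsets(vec![1, 2, 3]));
}
```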

@ -2779,6 +3033,34 @@ unsafe impl<#[may_dangle] T> Drop for IntoIter<T> {

|

|||

}

|

||||

}

|

||||

|

||||

#[unstable(issue = "none", feature = "inplace_iteration")]

|

||||

unsafe impl<T> InPlaceIterable for IntoIter<T> {}

|

||||

|

||||

#[unstable(issue = "none", feature = "inplace_iteration")]

|

||||

unsafe impl<T> SourceIter for IntoIter<T> {

|

||||

type Source = IntoIter<T>;

|

||||

|

||||

#[inline]

|

||||

unsafe fn as_inner(&mut self) -> &mut Self::Source {

|

||||

self

|

||||

}

|

||||

}

|

||||

|

||||

// internal helper trait for in-place iteration specialization.

|

||||

#[rustc_specialization_trait]

|

||||

pub(crate) trait AsIntoIter {

|

||||

type Item;

|

||||

fn as_into_iter(&mut self) -> &mut IntoIter<Self::Item>;

|

||||

}

|

||||

|

||||

impl<T> AsIntoIter for IntoIter<T> {

|

||||

type Item = T;

|

||||

|

||||

fn as_into_iter(&mut self) -> &mut IntoIter<Self::Item> {

|

||||

self

|

||||

}

|

||||

}

|

||||

|

||||

/// A draining iterator for `Vec<T>`.

|

||||

///

|

||||

/// This `struct` is created by [`Vec::drain`].

|

||||

|

|

@ -3042,7 +3324,7 @@ where

|

|||

old_len: usize,

|

||||

/// The filter test predicate.

|

||||

pred: F,

|

||||

/// A flag that indicates a panic has occurred in the filter test prodicate.

|

||||

/// A flag that indicates a panic has occurred in the filter test predicate.

|

||||

/// This is used as a hint in the drop implementation to prevent consumption

|

||||

/// of the remainder of the `DrainFilter`. Any unprocessed items will be

|

||||

/// backshifted in the `vec`, but no further items will be dropped or

|

||||

|

|

|

|||

|

|

@ -230,6 +230,18 @@ fn test_to_vec() {

|

|||

check_to_vec(vec![5, 4, 3, 2, 1, 5, 4, 3, 2, 1, 5, 4, 3, 2, 1]);

|

||||

}

|

||||

|

||||

#[test]

|

||||

fn test_in_place_iterator_specialization() {

|

||||

let src: Vec<usize> = vec![1, 2, 3];

|

||||

let src_ptr = src.as_ptr();

|

||||

let heap: BinaryHeap<_> = src.into_iter().map(std::convert::identity).collect();

|

||||

let heap_ptr = heap.iter().next().unwrap() as *const usize;

|

||||

assert_eq!(src_ptr, heap_ptr);

|

||||

let sink: Vec<_> = heap.into_iter().map(std::convert::identity).collect();

|

||||

let sink_ptr = sink.as_ptr();

|

||||

assert_eq!(heap_ptr, sink_ptr);

|

||||

}

|

||||

|

||||

#[test]

|

||||

fn test_empty_pop() {

|

||||

let mut heap = BinaryHeap::<i32>::new();

|

||||

|

|
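The test above checks that a `Vec` can pass through a `BinaryHeap` and back without moving its buffer. A hedged sketch of the same round trip outside the test suite follows; `round_trip` is an illustrative helper, and the pointer comparison is reported rather than asserted because allocation reuse is an optimization, not a documented guarantee.

```rust
use std::collections::BinaryHeap;

/// Sends a Vec through a BinaryHeap and back. Returns whether the final Vec
/// starts at the original address, plus the elements in sorted order.
fn round_trip(src: Vec<usize>) -> (bool, Vec<usize>) {
    let src_ptr = src.as_ptr();
    let heap: BinaryHeap<usize> = src.into_iter().map(std::convert::identity).collect();
    let mut sink: Vec<usize> = heap.into_iter().map(std::convert::identity).collect();
    let reused = src_ptr == sink.as_ptr();
    // Heap iteration order is unspecified, so sort before returning.
    sink.sort_unstable();
    (reused, sink)
}

fn main() {
    let (reused, sorted) = round_trip(vec![3, 1, 2]);
    println!("allocation reused: {}, contents: {:?}", reused, sorted);
}
```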

|

|||

|

|

@ -14,6 +14,8 @@

|

|||

#![feature(slice_ptr_get)]

|

||||

#![feature(split_inclusive)]

|

||||

#![feature(binary_heap_retain)]

|

||||

#![feature(inplace_iteration)]

|

||||

#![feature(iter_map_while)]

|

||||

|

||||

use std::collections::hash_map::DefaultHasher;

|

||||

use std::hash::{Hash, Hasher};

|

||||

|

|

|

|||

|

|

@ -1459,6 +1459,15 @@ fn test_to_vec() {

|

|||

assert_eq!(ys, [1, 2, 3]);

|

||||

}

|

||||

|

||||

#[test]

|

||||

fn test_in_place_iterator_specialization() {

|

||||

let src: Box<[usize]> = box [1, 2, 3];

|

||||

let src_ptr = src.as_ptr();

|

||||

let sink: Box<_> = src.into_vec().into_iter().map(std::convert::identity).collect();

|

||||

let sink_ptr = sink.as_ptr();

|

||||

assert_eq!(src_ptr, sink_ptr);

|

||||

}

|

||||

|

||||

#[test]

|

||||

fn test_box_slice_clone() {

|

||||

let data = vec![vec![0, 1], vec![0], vec![1]];

|

||||

|

|

|

|||

|

|

@ -1,8 +1,10 @@

|

|||

use std::borrow::Cow;

|

||||

use std::collections::TryReserveError::*;

|

||||

use std::fmt::Debug;

|

||||

use std::iter::InPlaceIterable;

|

||||

use std::mem::size_of;

|

||||

use std::panic::{catch_unwind, AssertUnwindSafe};

|

||||

use std::rc::Rc;

|

||||

use std::vec::{Drain, IntoIter};

|

||||

|

||||

struct DropCounter<'a> {

|

||||

|

|

@ -775,6 +777,87 @@ fn test_into_iter_leak() {

|

|||

assert_eq!(unsafe { DROPS }, 3);

|

||||

}

|

||||

|

||||

#[test]

|

||||

fn test_from_iter_specialization() {

|

||||

let src: Vec<usize> = vec![0usize; 1];

|

||||

let srcptr = src.as_ptr();

|

||||

let sink = src.into_iter().collect::<Vec<_>>();

|

||||

let sinkptr = sink.as_ptr();

|

||||

assert_eq!(srcptr, sinkptr);

|

||||

}

|

||||

|

||||

#[test]

|

||||

fn test_from_iter_partially_drained_in_place_specialization() {

|

||||

let src: Vec<usize> = vec![0usize; 10];

|

||||

let srcptr = src.as_ptr();

|

||||

let mut iter = src.into_iter();

|

||||

iter.next();

|

||||

iter.next();

|

||||

let sink = iter.collect::<Vec<_>>();

|

||||

let sinkptr = sink.as_ptr();

|

||||

assert_eq!(srcptr, sinkptr);

|

||||

}

|

||||

|

||||

#[test]

|

||||

fn test_from_iter_specialization_with_iterator_adapters() {

|

||||

fn assert_in_place_trait<T: InPlaceIterable>(_: &T) {};

|

||||

let src: Vec<usize> = vec![0usize; 65535];

|

||||

let srcptr = src.as_ptr();

|

||||

let iter = src

|

||||

.into_iter()

|

||||

.enumerate()

|

||||

.map(|i| i.0 + i.1)

|

||||

.zip(std::iter::repeat(1usize))

|

||||

.map(|(a, b)| a + b)

|

||||

.map_while(Option::Some)

|

||||

.peekable()

|

||||

.skip(1)

|

||||

.map(|e| std::num::NonZeroUsize::new(e));

|

||||

assert_in_place_trait(&iter);

|

||||

let sink = iter.collect::<Vec<_>>();

|

||||

let sinkptr = sink.as_ptr();

|

||||

assert_eq!(srcptr, sinkptr as *const usize);

|

||||

}

|

||||

|

||||

#[test]

|

||||

fn test_from_iter_specialization_head_tail_drop() {

|

||||

let drop_count: Vec<_> = (0..=2).map(|_| Rc::new(())).collect();

|

||||

let src: Vec<_> = drop_count.iter().cloned().collect();

|

||||

let srcptr = src.as_ptr();

|

||||

let iter = src.into_iter();

|

||||

let sink: Vec<_> = iter.skip(1).take(1).collect();

|

||||

let sinkptr = sink.as_ptr();

|

||||

assert_eq!(srcptr, sinkptr, "specialization was applied");

|

||||

assert_eq!(Rc::strong_count(&drop_count[0]), 1, "front was dropped");

|

||||

assert_eq!(Rc::strong_count(&drop_count[1]), 2, "one element was collected");

|

||||

assert_eq!(Rc::strong_count(&drop_count[2]), 1, "tail was dropped");

|

||||

assert_eq!(sink.len(), 1);

|

||||

}

|

||||

|

||||

#[test]

|

||||

fn test_from_iter_specialization_panic_drop() {

|

||||

let drop_count: Vec<_> = (0..=2).map(|_| Rc::new(())).collect();

|

||||

let src: Vec<_> = drop_count.iter().cloned().collect();

|

||||

let iter = src.into_iter();

|

||||

|

||||

let _ = std::panic::catch_unwind(AssertUnwindSafe(|| {

|

||||

let _ = iter

|

||||

.enumerate()

|

||||

.filter_map(|(i, e)| {

|

||||

if i == 1 {

|

||||

std::panic!("aborting iteration");

|

||||

}

|

||||

Some(e)

|

||||

})

|

||||

.collect::<Vec<_>>();

|

||||

}));

|

||||

|

||||

assert!(

|

||||

drop_count.iter().map(Rc::strong_count).all(|count| count == 1),

|

||||

"all items were dropped once"

|

||||

);

|

||||

}

|

||||

|

||||

#[test]

|

||||

fn test_cow_from() {

|

||||

let borrowed: &[_] = &["borrowed", "(slice)"];

|

||||

|

|
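The head/tail drop test above relies on a property that must hold with or without the in-place specialization: elements skipped at the front and elements left unconsumed at the back are dropped exactly once. A minimal sketch that observes this through `Rc` strong counts follows; `strong_counts_after_skip_take` is a hypothetical helper name.

```rust
use std::rc::Rc;

/// Collects only the middle element of a three-element Vec and reports the
/// strong count of each original `Rc`, plus the length of the collected Vec.
fn strong_counts_after_skip_take() -> (Vec<usize>, usize) {
    // One extra handle per element so the drops can be observed from outside.
    let witnesses: Vec<Rc<()>> = (0..3).map(|_| Rc::new(())).collect();
    let src: Vec<Rc<()>> = witnesses.iter().cloned().collect();
    let sink: Vec<Rc<()>> = src.into_iter().skip(1).take(1).collect();
    // `sink` is still alive here, so the collected element keeps its count at 2.
    let counts = witnesses.iter().map(Rc::strong_count).collect();
    (counts, sink.len())
}

fn main() {
    let (counts, len) = strong_counts_after_skip_take();
    println!("counts = {:?}, collected = {}", counts, len);
}
```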

|

|||

|

|

@ -1,5 +1,7 @@

|

|||

use super::InPlaceIterable;

|

||||

use crate::intrinsics;

|

||||

use crate::iter::adapters::zip::try_get_unchecked;

|

||||

use crate::iter::adapters::SourceIter;

|

||||

use crate::iter::TrustedRandomAccess;

|

||||

use crate::iter::{DoubleEndedIterator, ExactSizeIterator, FusedIterator, Iterator};

|

||||

use crate::ops::Try;

|

||||

|

|

@ -517,3 +519,24 @@ where

|

|||

unchecked!(self).is_empty()

|

||||

}

|

||||

}

|

||||

|

||||

#[unstable(issue = "none", feature = "inplace_iteration")]

|

||||

unsafe impl<S: Iterator, I: FusedIterator> SourceIter for Fuse<I>

|

||||

where

|

||||

I: SourceIter<Source = S>,

|

||||

{

|

||||

type Source = S;

|

||||

|

||||

#[inline]

|

||||

unsafe fn as_inner(&mut self) -> &mut S {

|

||||

match self.iter {

|

||||

// Safety: unsafe function forwarding to unsafe function with the same requirements

|

||||

Some(ref mut iter) => unsafe { SourceIter::as_inner(iter) },

|

||||

// SAFETY: the specialized iterator never sets `None`

|

||||

None => unsafe { intrinsics::unreachable() },

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

#[unstable(issue = "none", feature = "inplace_iteration")]

|

||||

unsafe impl<I: InPlaceIterable> InPlaceIterable for Fuse<I> {}

|

||||

|

|

|

|||

|

|

@ -4,7 +4,9 @@ use crate::intrinsics;

|

|||

use crate::ops::{Add, AddAssign, ControlFlow, Try};

|

||||

|

||||

use super::from_fn;

|

||||

use super::{DoubleEndedIterator, ExactSizeIterator, FusedIterator, Iterator, TrustedLen};

|

||||

use super::{

|

||||

DoubleEndedIterator, ExactSizeIterator, FusedIterator, InPlaceIterable, Iterator, TrustedLen,

|

||||

};

|

||||

|

||||

mod chain;

|

||||

mod flatten;

|

||||

|

|

@ -16,9 +18,77 @@ pub use self::chain::Chain;

|

|||

pub use self::flatten::{FlatMap, Flatten};

|

||||

pub use self::fuse::Fuse;

|

||||

use self::zip::try_get_unchecked;

|

||||

pub(crate) use self::zip::TrustedRandomAccess;

|

||||

#[unstable(feature = "trusted_random_access", issue = "none")]

|

||||

pub use self::zip::TrustedRandomAccess;

|

||||

pub use self::zip::Zip;

|

||||

|

||||

/// This trait provides transitive access to source-stage in an iterator-adapter pipeline

|

||||

/// under the conditions that

|

||||

/// * the iterator source `S` itself implements `SourceIter<Source = S>`

|

||||

/// * there is a delegating implementation of this trait for each adapter in the pipeline between

|

||||

/// the source and the pipeline consumer.

|

||||

///

|

||||

/// When the source is an owning iterator struct (commonly called `IntoIter`) then

|

||||

/// this can be useful for specializing [`FromIterator`] implementations or recovering the

|

||||

/// remaining elements after an iterator has been partially exhausted.

|

||||

///

|

||||

/// Note that implementations do not necessarily have to provide access to the inner-most

|

||||

/// source of a pipeline. A stateful intermediate adapter might eagerly evaluate a part

|

||||

/// of the pipeline and expose its internal storage as source.

|

||||

///

|

||||

/// The trait is unsafe because implementers must uphold additional safety properties.

|

||||

/// See [`as_inner`] for details.

|

||||

///

|

||||

/// # Examples

|

||||

///

|

||||

/// Retrieving a partially consumed source:

|

||||

///

|

||||

/// ```

|

||||

/// # #![feature(inplace_iteration)]

|

||||

/// # use std::iter::SourceIter;

|

||||

///

|

||||

/// let mut iter = vec![9, 9, 9].into_iter().map(|i| i * i);

|

||||

/// let _ = iter.next();

|

||||

/// let mut remainder = std::mem::replace(unsafe { iter.as_inner() }, Vec::new().into_iter());

|

||||

/// println!("n = {} elements remaining", remainder.len());

|

||||

/// ```

|

||||

///

|

||||

/// [`FromIterator`]: crate::iter::FromIterator

|

||||

/// [`as_inner`]: SourceIter::as_inner

|

||||

#[unstable(issue = "none", feature = "inplace_iteration")]

|

||||

pub unsafe trait SourceIter {

|

||||

/// A source stage in an iterator pipeline.

|

||||

type Source: Iterator;

|

||||

|

||||

/// Retrieve the source of an iterator pipeline.

|

||||

///

|

||||

/// # Safety

|

||||

///

|

||||

/// Implementations must return the same mutable reference for their lifetime, unless

|

||||

/// replaced by a caller.

|

||||

/// Callers may only replace the reference when they stopped iteration and drop the

|

||||

/// iterator pipeline after extracting the source.

|

||||

///

|

||||

/// This means iterator adapters can rely on the source not changing during

|

||||

/// iteration but they cannot rely on it in their Drop implementations.

|

||||

///

|

||||

/// Implementing this method means adapters relinquish private-only access to their

|

||||

/// source and can only rely on guarantees made based on method receiver types.

|

||||

/// The lack of restricted access also requires that adapters must uphold the source's

|

||||

/// public API even when they have access to its internals.

|

||||

///

|

||||

/// Callers in turn must expect the source to be in any state that is consistent with

|

||||

/// its public API since adapters sitting between it and the source have the same

|

||||

/// access. In particular an adapter may have consumed more elements than strictly necessary.

|

||||

///

|

||||

/// The overall goal of these requirements is to let the consumer of a pipeline use

|

||||

/// * whatever remains in the source after iteration has stopped

|

||||

/// * the memory that has become unused by advancing a consuming iterator

|

||||

///

|

||||

/// [`next()`]: trait.Iterator.html#method.next

|

||||

unsafe fn as_inner(&mut self) -> &mut Self::Source;

|

||||

}

|

||||

|

||||

/// A double-ended iterator with the direction inverted.

|

||||

///

|

||||

/// This `struct` is created by the [`rev`] method on [`Iterator`]. See its

|

||||

|

|
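The `SourceIter` documentation above describes a delegation pattern: the source implements the trait by returning itself, and each adapter forwards to the iterator it wraps. Because `SourceIter` is unstable, the sketch below models the same pattern on stable Rust with a safe stand-in trait. `PipelineSource`, `source_mut`, and `MapStage` are hypothetical names introduced only for this illustration and are not part of the standard library.

```rust
use std::vec::IntoIter;

/// Hypothetical safe stand-in for the unstable `SourceIter` trait.
trait PipelineSource {
    type Source: Iterator;
    fn source_mut(&mut self) -> &mut Self::Source;
}

/// The source stage returns itself.
impl<T> PipelineSource for IntoIter<T> {
    type Source = IntoIter<T>;
    fn source_mut(&mut self) -> &mut Self::Source {
        self
    }
}

/// Minimal map adapter written for this example.
struct MapStage<I, F> {
    iter: I,
    f: F,
}

impl<B, I: Iterator, F: FnMut(I::Item) -> B> Iterator for MapStage<I, F> {
    type Item = B;
    fn next(&mut self) -> Option<B> {
        self.iter.next().map(&mut self.f)
    }
}

/// An adapter delegates to the stage it wraps, giving transitive access.
impl<I: PipelineSource, F> PipelineSource for MapStage<I, F> {
    type Source = I::Source;
    fn source_mut(&mut self) -> &mut Self::Source {
        self.iter.source_mut()
    }
}

/// Consumes one element through the adapter, then takes the rest of the source.
fn remaining_after_one() -> usize {
    let mut iter = MapStage { iter: vec![9, 9, 9].into_iter(), f: |i: i32| i * i };
    let _ = iter.next();
    let remainder = std::mem::replace(iter.source_mut(), Vec::new().into_iter());
    remainder.len()
}

fn main() {
    println!("n = {} elements remaining", remaining_after_one());
}
```

The real trait is `unsafe` because callers and adapters must also agree on the invariants listed under `as_inner`; the stand-in here ignores those and only shows the forwarding structure.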

@ -939,6 +1009,24 @@ where

|

|||

}

|

||||

}

|

||||

|

||||

#[unstable(issue = "none", feature = "inplace_iteration")]

|

||||

unsafe impl<S: Iterator, B, I: Iterator, F> SourceIter for Map<I, F>

|

||||

where

|

||||

F: FnMut(I::Item) -> B,

|

||||

I: SourceIter<Source = S>,

|

||||

{

|

||||

type Source = S;

|

||||

|

||||

#[inline]

|

||||

unsafe fn as_inner(&mut self) -> &mut S {

|

||||

// Safety: unsafe function forwarding to unsafe function with the same requirements

|

||||

unsafe { SourceIter::as_inner(&mut self.iter) }

|

||||

}

|

||||

}

|

||||

|

||||

#[unstable(issue = "none", feature = "inplace_iteration")]

|

||||

unsafe impl<B, I: InPlaceIterable, F> InPlaceIterable for Map<I, F> where F: FnMut(I::Item) -> B {}

|

||||

|

||||

/// An iterator that filters the elements of `iter` with `predicate`.

|

||||

///

|

||||

/// This `struct` is created by the [`filter`] method on [`Iterator`]. See its

|

||||

|

|

@ -1070,6 +1158,24 @@ where

|

|||

#[stable(feature = "fused", since = "1.26.0")]

|

||||

impl<I: FusedIterator, P> FusedIterator for Filter<I, P> where P: FnMut(&I::Item) -> bool {}

|

||||

|

||||

#[unstable(issue = "none", feature = "inplace_iteration")]

|

||||

unsafe impl<S: Iterator, P, I: Iterator> SourceIter for Filter<I, P>

|

||||

where

|

||||

P: FnMut(&I::Item) -> bool,

|

||||

I: SourceIter<Source = S>,

|

||||

{

|

||||

type Source = S;

|

||||

|

||||

#[inline]

|

||||

unsafe fn as_inner(&mut self) -> &mut S {

|

||||

// Safety: unsafe function forwarding to unsafe function with the same requirements

|

||||

unsafe { SourceIter::as_inner(&mut self.iter) }

|

||||

}

|

||||

}

|

||||

|

||||

#[unstable(issue = "none", feature = "inplace_iteration")]

|

||||

unsafe impl<I: InPlaceIterable, P> InPlaceIterable for Filter<I, P> where P: FnMut(&I::Item) -> bool {}

|

||||

|

||||

/// An iterator that uses `f` to both filter and map elements from `iter`.

|

||||

///

|

||||

/// This `struct` is created by the [`filter_map`] method on [`Iterator`]. See its

|

||||

|

|
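The PR description raises a concern about `Filter`: once it is in-place collectible, `vec.into_iter().filter(|_| false).collect()` may hand back the original allocation instead of an empty, zero-capacity `Vec`. A small sketch for observing this on a given toolchain follows; `filter_everything` is a hypothetical helper, and the resulting capacity is printed rather than asserted because later standard library versions may apply a different shrinking policy.

```rust
/// Filters out every element and reports
/// (capacity before, length after, capacity after).
fn filter_everything(src: Vec<u64>) -> (usize, usize, usize) {
    let original_capacity = src.capacity();
    let sink: Vec<u64> = src.into_iter().filter(|_| false).collect();
    (original_capacity, sink.len(), sink.capacity())
}

fn main() {
    let (before, len, after) = filter_everything(vec![0u64; 1024]);
    println!("capacity before: {}, len after: {}, capacity after: {}", before, len, after);
}
```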

@ -1196,6 +1302,27 @@ where

|

|||

#[stable(feature = "fused", since = "1.26.0")]

|

||||

impl<B, I: FusedIterator, F> FusedIterator for FilterMap<I, F> where F: FnMut(I::Item) -> Option<B> {}

|

||||

|

||||

#[unstable(issue = "none", feature = "inplace_iteration")]

|

||||

unsafe impl<S: Iterator, B, I: Iterator, F> SourceIter for FilterMap<I, F>

|

||||

where

|

||||

F: FnMut(I::Item) -> Option<B>,

|

||||

I: SourceIter<Source = S>,

|

||||

{

|

||||

type Source = S;

|

||||

|

||||

#[inline]

|

||||

unsafe fn as_inner(&mut self) -> &mut S {

|

||||

// Safety: unsafe function forwarding to unsafe function with the same requirements

|

||||

unsafe { SourceIter::as_inner(&mut self.iter) }

|

||||

}

|

||||

}

|

||||

|

||||

#[unstable(issue = "none", feature = "inplace_iteration")]

|

||||

unsafe impl<B, I: InPlaceIterable, F> InPlaceIterable for FilterMap<I, F> where

|

||||

F: FnMut(I::Item) -> Option<B>

|

||||

{

|

||||

}

|

||||

|

||||

/// An iterator that yields the current count and the element during iteration.

|

||||

///

|

||||

/// This `struct` is created by the [`enumerate`] method on [`Iterator`]. See its

|

||||

|

|

@ -1414,6 +1541,23 @@ impl<I> FusedIterator for Enumerate<I> where I: FusedIterator {}

|

|||

#[unstable(feature = "trusted_len", issue = "37572")]

|

||||

unsafe impl<I> TrustedLen for Enumerate<I> where I: TrustedLen {}

|

||||

|

||||

#[unstable(issue = "none", feature = "inplace_iteration")]

|

||||

unsafe impl<S: Iterator, I: Iterator> SourceIter for Enumerate<I>

|

||||

where

|

||||

I: SourceIter<Source = S>,

|

||||

{

|

||||

type Source = S;

|

||||

|

||||

#[inline]

|

||||

unsafe fn as_inner(&mut self) -> &mut S {

|

||||

// Safety: unsafe function forwarding to unsafe function with the same requirements

|

||||

unsafe { SourceIter::as_inner(&mut self.iter) }

|

||||

}

|

||||

}

|

||||

|

||||

#[unstable(issue = "none", feature = "inplace_iteration")]

|

||||

unsafe impl<I: InPlaceIterable> InPlaceIterable for Enumerate<I> {}

|

||||

|

||||

/// An iterator with a `peek()` that returns an optional reference to the next

|

||||

/// element.

|

||||

///

|

||||

|

|

@ -1692,6 +1836,26 @@ impl<I: Iterator> Peekable<I> {

|

|||

}

|

||||

}

|

||||

|

||||

#[unstable(feature = "trusted_len", issue = "37572")]

|

||||

unsafe impl<I> TrustedLen for Peekable<I> where I: TrustedLen {}

|

||||

|

||||

#[unstable(issue = "none", feature = "inplace_iteration")]

|

||||

unsafe impl<S: Iterator, I: Iterator> SourceIter for Peekable<I>

|

||||

where

|

||||

I: SourceIter<Source = S>,

|

||||

{

|

||||

type Source = S;

|

||||

|

||||

#[inline]

|

||||

unsafe fn as_inner(&mut self) -> &mut S {

|

||||

// Safety: unsafe function forwarding to unsafe function with the same requirements

|

||||

unsafe { SourceIter::as_inner(&mut self.iter) }

|

||||

}

|

||||

}

|

||||

|

||||

#[unstable(issue = "none", feature = "inplace_iteration")]

|

||||

unsafe impl<I: InPlaceIterable> InPlaceIterable for Peekable<I> {}

|

||||

|

||||

/// An iterator that rejects elements while `predicate` returns `true`.

|

||||

///

|

||||

/// This `struct` is created by the [`skip_while`] method on [`Iterator`]. See its

|

||||

|

|

@ -1793,6 +1957,27 @@ where

|

|||

{

|

||||

}

|

||||

|

||||

#[unstable(issue = "none", feature = "inplace_iteration")]

|

||||

unsafe impl<S: Iterator, P, I: Iterator> SourceIter for SkipWhile<I, P>

|

||||

where

|

||||

P: FnMut(&I::Item) -> bool,

|

||||

I: SourceIter<Source = S>,

|

||||

{

|

||||

type Source = S;

|

||||

|

||||

#[inline]

|

||||

unsafe fn as_inner(&mut self) -> &mut S {

|

||||

// Safety: unsafe function forwarding to unsafe function with the same requirements

|

||||

unsafe { SourceIter::as_inner(&mut self.iter) }

|

||||

}

|

||||

}

|

||||

|

||||

#[unstable(issue = "none", feature = "inplace_iteration")]

|

||||

unsafe impl<I: InPlaceIterable, F> InPlaceIterable for SkipWhile<I, F> where

|

||||

F: FnMut(&I::Item) -> bool

|

||||

{

|

||||

}

|

||||

|

||||

/// An iterator that only accepts elements while `predicate` returns `true`.

|

||||

///

|

||||

/// This `struct` is created by the [`take_while`] method on [`Iterator`]. See its

|

||||

|

|

@ -1907,6 +2092,27 @@ where

|

|||

{

|

||||

}

|

||||

|

||||

#[unstable(issue = "none", feature = "inplace_iteration")]

|

||||

unsafe impl<S: Iterator, P, I: Iterator> SourceIter for TakeWhile<I, P>

|

||||

where

|

||||

P: FnMut(&I::Item) -> bool,

|

||||

I: SourceIter<Source = S>,

|

||||

{

|

||||

type Source = S;

|

||||

|

||||

#[inline]

|

||||

unsafe fn as_inner(&mut self) -> &mut S {

|

||||

// Safety: unsafe function forwarding to unsafe function with the same requirements

|

||||

unsafe { SourceIter::as_inner(&mut self.iter) }

|

||||

}

|

||||

}

|

||||

|

||||

#[unstable(issue = "none", feature = "inplace_iteration")]

|

||||

unsafe impl<I: InPlaceIterable, F> InPlaceIterable for TakeWhile<I, F> where

|

||||

F: FnMut(&I::Item) -> bool

|

||||

{

|

||||

}

|

||||

|

||||

/// An iterator that only accepts elements while `predicate` returns `Some(_)`.

|

||||

///

|

||||

/// This `struct` is created by the [`map_while`] method on [`Iterator`]. See its

|

||||

|

|

@ -1984,6 +2190,27 @@ where

|

|||

}

|

||||

}

|

||||

|

||||

#[unstable(issue = "none", feature = "inplace_iteration")]

|

||||

unsafe impl<S: Iterator, B, I: Iterator, P> SourceIter for MapWhile<I, P>

|

||||

where

|

||||

P: FnMut(I::Item) -> Option<B>,

|

||||

I: SourceIter<Source = S>,

|

||||

{

|

||||

type Source = S;

|

||||

|

||||

#[inline]

|

||||

unsafe fn as_inner(&mut self) -> &mut S {

|

||||

// Safety: unsafe function forwarding to unsafe function with the same requirements

|

||||

unsafe { SourceIter::as_inner(&mut self.iter) }

|

||||

}

|

||||

}

|

||||

|

||||

#[unstable(issue = "none", feature = "inplace_iteration")]

|

||||

unsafe impl<B, I: InPlaceIterable, P> InPlaceIterable for MapWhile<I, P> where

|

||||

P: FnMut(I::Item) -> Option<B>

|

||||

{

|

||||

}

|

||||

|

||||

/// An iterator that skips over `n` elements of `iter`.

|

||||

///

|

||||

/// This `struct` is created by the [`skip`] method on [`Iterator`]. See its

|

||||

|

|

@ -2167,6 +2394,23 @@ where

|

|||

#[stable(feature = "fused", since = "1.26.0")]

|

||||

impl<I> FusedIterator for Skip<I> where I: FusedIterator {}

|

||||

|

||||

#[unstable(issue = "none", feature = "inplace_iteration")]

|

||||

unsafe impl<S: Iterator, I: Iterator> SourceIter for Skip<I>

|

||||

where

|

||||

I: SourceIter<Source = S>,

|

||||

{

|

||||

type Source = S;

|

||||

|

||||

#[inline]

|

||||

unsafe fn as_inner(&mut self) -> &mut S {

|

||||

// Safety: unsafe function forwarding to unsafe function with the same requirements

|

||||

unsafe { SourceIter::as_inner(&mut self.iter) }

|

||||

}

|

||||

}

|

||||

|

||||

#[unstable(issue = "none", feature = "inplace_iteration")]

|

||||

unsafe impl<I: InPlaceIterable> InPlaceIterable for Skip<I> {}

|

||||

|

||||

/// An iterator that only iterates over the first `n` iterations of `iter`.

|

||||

///

|

||||

/// This `struct` is created by the [`take`] method on [`Iterator`]. See its

|

||||

|

|

@ -2277,6 +2521,23 @@ where

|

|||

}

|

||||

}

|

||||

|

||||

#[unstable(issue = "none", feature = "inplace_iteration")]

|

||||

unsafe impl<S: Iterator, I: Iterator> SourceIter for Take<I>

|

||||

where

|

||||

I: SourceIter<Source = S>,

|

||||