Auto merge of #74091 - richkadel:llvm-coverage-map-gen-4, r=tmandry

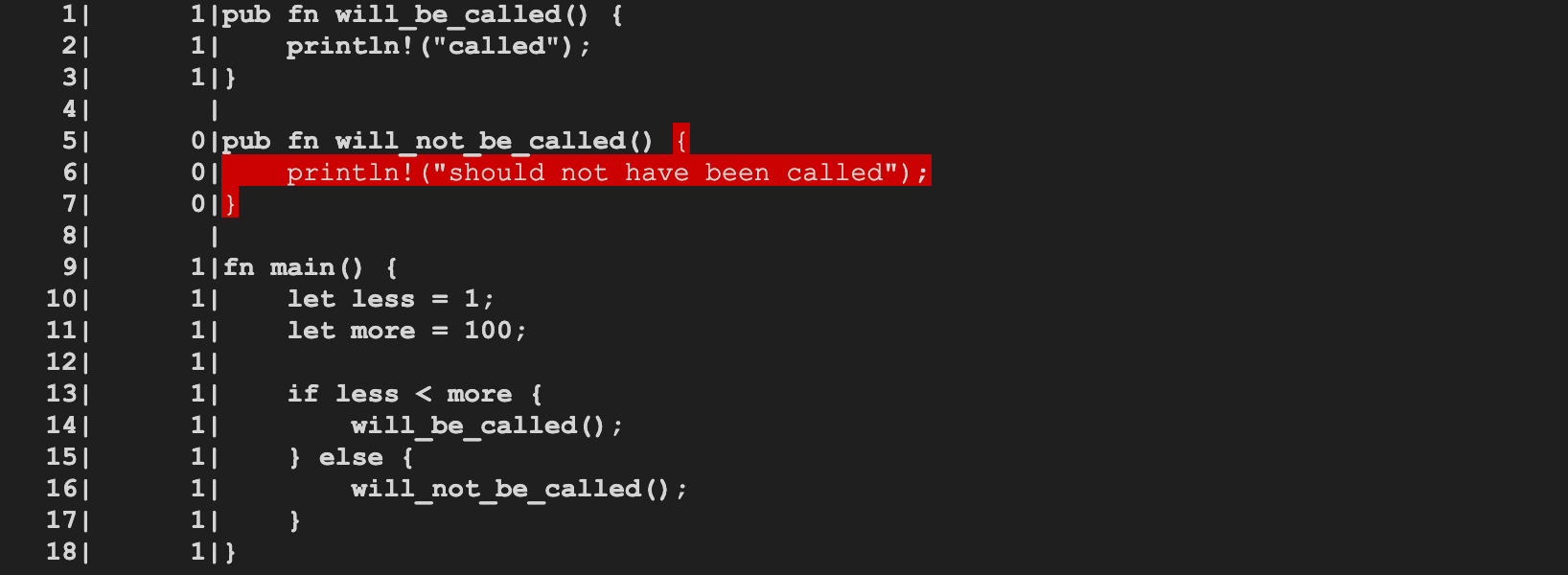

Generating the coverage map

@tmandry @wesleywiser

rustc now generates the coverage map and can support (limited) coverage report generation, at the function level.

Example commands to generate a coverage report:

```shell
$ BUILD=$HOME/rust/build/x86_64-unknown-linux-gnu
$ $BUILD/stage1/bin/rustc -Zinstrument-coverage \
    $HOME/rust/src/test/run-make-fulldeps/instrument-coverage/main.rs
$ LLVM_PROFILE_FILE="main.profraw" ./main
called
$ $BUILD/llvm/bin/llvm-profdata merge -sparse main.profraw -o main.profdata
$ $BUILD/llvm/bin/llvm-cov show --instr-profile=main.profdata main
```

r? @wesleywiser

Rust compiler MCP rust-lang/compiler-team#278

Relevant issue: #34701 - Implement support for LLVMs code coverage instrumentation

commit 47ea6d90b0

@@ -2821,6 +2821,13 @@ dependencies = [
 "rls-span",
]

[[package]]
name = "rust-demangler"
version = "0.0.0"
dependencies = [
 "rustc-demangle",
]

[[package]]
name = "rustbook"
version = "0.1.0"

@@ -17,6 +17,7 @@ members = [
  "src/tools/remote-test-client",
  "src/tools/remote-test-server",
  "src/tools/rust-installer",
  "src/tools/rust-demangler",
  "src/tools/cargo",
  "src/tools/rustdoc",
  "src/tools/rls",

@@ -370,6 +370,7 @@ impl<'a> Builder<'a> {
                tool::Cargo,
                tool::Rls,
                tool::RustAnalyzer,
                tool::RustDemangler,
                tool::Rustdoc,
                tool::Clippy,
                tool::CargoClippy,

@@ -1022,6 +1022,10 @@ impl Step for Compiletest {
            cmd.arg("--rustdoc-path").arg(builder.rustdoc(compiler));
        }

        if mode == "run-make" && suite.ends_with("fulldeps") {
            cmd.arg("--rust-demangler-path").arg(builder.tool_exe(Tool::RustDemangler));
        }

        cmd.arg("--src-base").arg(builder.src.join("src/test").join(suite));
        cmd.arg("--build-base").arg(testdir(builder, compiler.host).join(suite));
        cmd.arg("--stage-id").arg(format!("stage{}-{}", compiler.stage, target));

@@ -361,6 +361,7 @@ bootstrap_tool!(
    Compiletest, "src/tools/compiletest", "compiletest", is_unstable_tool = true;
    BuildManifest, "src/tools/build-manifest", "build-manifest";
    RemoteTestClient, "src/tools/remote-test-client", "remote-test-client";
    RustDemangler, "src/tools/rust-demangler", "rust-demangler";
    RustInstaller, "src/tools/rust-installer", "fabricate", is_external_tool = true;
    RustdocTheme, "src/tools/rustdoc-themes", "rustdoc-themes";
    ExpandYamlAnchors, "src/tools/expand-yaml-anchors", "expand-yaml-anchors";

@@ -1958,8 +1958,14 @@ extern "rust-intrinsic" {
    /// Internal placeholder for injecting code coverage counters when the "instrument-coverage"
    /// option is enabled. The placeholder is replaced with `llvm.instrprof.increment` during code
    /// generation.
    #[cfg(not(bootstrap))]
    #[lang = "count_code_region"]
    pub fn count_code_region(index: u32, start_byte_pos: u32, end_byte_pos: u32);
    pub fn count_code_region(
        function_source_hash: u64,
        index: u32,
        start_byte_pos: u32,
        end_byte_pos: u32,
    );

    /// Internal marker for code coverage expressions, injected into the MIR when the
    /// "instrument-coverage" option is enabled. This intrinsic is not converted into a

@@ -1967,6 +1973,8 @@ extern "rust-intrinsic" {
    /// "coverage map", which is injected into the generated code, as additional data.
    /// This marker identifies a code region and two other counters or counter expressions
    /// whose sum is the number of times the code region was executed.
    #[cfg(not(bootstrap))]
    #[lang = "coverage_counter_add"]
    pub fn coverage_counter_add(
        index: u32,
        left_index: u32,

@@ -1978,6 +1986,8 @@ extern "rust-intrinsic" {
    /// This marker identifies a code region and two other counters or counter expressions
    /// whose difference is the number of times the code region was executed.
    /// (See `coverage_counter_add` for more information.)
    #[cfg(not(bootstrap))]
    #[lang = "coverage_counter_subtract"]
    pub fn coverage_counter_subtract(
        index: u32,
        left_index: u32,

@@ -133,6 +133,9 @@ fn set_probestack(cx: &CodegenCx<'ll, '_>, llfn: &'ll Value) {
        return;
    }

    // FIXME(richkadel): Make sure probestack plays nice with `-Z instrument-coverage`
    // or disable it if not, similar to above early exits.

    // Flag our internal `__rust_probestack` function as the stack probe symbol.
    // This is defined in the `compiler-builtins` crate for each architecture.
    llvm::AddFunctionAttrStringValue(

@@ -144,17 +144,18 @@ pub fn compile_codegen_unit(
                }
            }

            // Finalize code coverage by injecting the coverage map. Note, the coverage map will
            // also be added to the `llvm.used` variable, created next.
            if cx.sess().opts.debugging_opts.instrument_coverage {
                cx.coverageinfo_finalize();
            }

            // Create the llvm.used variable
            // This variable has type [N x i8*] and is stored in the llvm.metadata section
            if !cx.used_statics().borrow().is_empty() {
                cx.create_used_variable()
            }

            // Finalize code coverage by injecting the coverage map
            if cx.sess().opts.debugging_opts.instrument_coverage {
                cx.coverageinfo_finalize();
            }

            // Finalize debuginfo
            if cx.sess().opts.debuginfo != DebugInfo::None {
                cx.debuginfo_finalize();

@@ -1060,7 +1060,7 @@ impl BuilderMethods<'a, 'tcx> for Builder<'a, 'll, 'tcx> {
            fn_name, hash, num_counters, index
        );

        let llfn = unsafe { llvm::LLVMRustGetInstrprofIncrementIntrinsic(self.cx().llmod) };
        let llfn = unsafe { llvm::LLVMRustGetInstrProfIncrementIntrinsic(self.cx().llmod) };
        let args = &[fn_name, hash, num_counters, index];
        let args = self.check_call("call", llfn, args);

@@ -493,10 +493,14 @@ impl StaticMethods for CodegenCx<'ll, 'tcx> {
            }

            if attrs.flags.contains(CodegenFnAttrFlags::USED) {
                // This static will be stored in the llvm.used variable which is an array of i8*
                let cast = llvm::LLVMConstPointerCast(g, self.type_i8p());
                self.used_statics.borrow_mut().push(cast);
                self.add_used_global(g);
            }
        }
    }

    /// Add a global value to a list to be stored in the `llvm.used` variable, an array of i8*.
    fn add_used_global(&self, global: &'ll Value) {
        let cast = unsafe { llvm::LLVMConstPointerCast(global, self.type_i8p()) };
        self.used_statics.borrow_mut().push(cast);
    }
}

src/librustc_codegen_llvm/coverageinfo/mapgen.rs (normal file, 274 lines)

@@ -0,0 +1,274 @@
use crate::llvm;

use crate::common::CodegenCx;
use crate::coverageinfo;

use log::debug;
use rustc_codegen_ssa::coverageinfo::map::*;
use rustc_codegen_ssa::traits::{BaseTypeMethods, ConstMethods, MiscMethods};
use rustc_data_structures::fx::FxHashMap;
use rustc_llvm::RustString;
use rustc_middle::ty::Instance;
use rustc_middle::{bug, mir};

use std::collections::BTreeMap;
use std::ffi::CString;
use std::path::PathBuf;

// FIXME(richkadel): Complete all variations of generating and exporting the coverage map to LLVM.
// The current implementation is an initial foundation with basic capabilities (Counters, but not
// CounterExpressions, etc.).

/// Generates and exports the Coverage Map.
///
/// This Coverage Map complies with Coverage Mapping Format version 3 (zero-based encoded as 2),
/// as defined at [LLVM Code Coverage Mapping Format](https://github.com/rust-lang/llvm-project/blob/llvmorg-8.0.0/llvm/docs/CoverageMappingFormat.rst#llvm-code-coverage-mapping-format)
/// and published in Rust's current (July 2020) fork of LLVM. This version is supported by the
/// LLVM coverage tools (`llvm-profdata` and `llvm-cov`) bundled with Rust's fork of LLVM.
///
/// Consequently, Rust's bundled version of Clang also generates Coverage Maps compliant with
/// version 3. Clang's implementation of Coverage Map generation was referenced when implementing
/// this Rust version, and though the format documentation is very explicit and detailed, some
/// undocumented details in Clang's implementation (that may or may not be important) were also
/// replicated for Rust's Coverage Map.
pub fn finalize<'ll, 'tcx>(cx: &CodegenCx<'ll, 'tcx>) {
    let mut coverage_writer = CoverageMappingWriter::new(cx);

    let function_coverage_map = cx.coverage_context().take_function_coverage_map();

    // Encode coverage mappings and generate function records
    let mut function_records = Vec::<&'ll llvm::Value>::new();
    let coverage_mappings_buffer = llvm::build_byte_buffer(|coverage_mappings_buffer| {
        for (instance, function_coverage) in function_coverage_map.into_iter() {
            if let Some(function_record) = coverage_writer.write_function_mappings_and_record(
                instance,
                function_coverage,
                coverage_mappings_buffer,
            ) {
                function_records.push(function_record);
            }
        }
    });

    // Encode all filenames covered in this module, ordered by `file_id`
    let filenames_buffer = llvm::build_byte_buffer(|filenames_buffer| {
        coverageinfo::write_filenames_section_to_buffer(
            &coverage_writer.filenames,
            filenames_buffer,
        );
    });

    if coverage_mappings_buffer.len() > 0 {
        // Generate the LLVM IR representation of the coverage map and store it in a well-known
        // global constant.
        coverage_writer.write_coverage_map(
            function_records,
            filenames_buffer,
            coverage_mappings_buffer,
        );
    }
}

struct CoverageMappingWriter<'a, 'll, 'tcx> {
    cx: &'a CodegenCx<'ll, 'tcx>,
    filenames: Vec<CString>,
    filename_to_index: FxHashMap<CString, u32>,
}

impl<'a, 'll, 'tcx> CoverageMappingWriter<'a, 'll, 'tcx> {
    fn new(cx: &'a CodegenCx<'ll, 'tcx>) -> Self {
        Self { cx, filenames: Vec::new(), filename_to_index: FxHashMap::<CString, u32>::default() }
    }

    /// For the given function, get the coverage region data, stream it to the given buffer, and
    /// then generate and return a new function record.
    fn write_function_mappings_and_record(
        &mut self,
        instance: Instance<'tcx>,
        mut function_coverage: FunctionCoverage,
        coverage_mappings_buffer: &RustString,
    ) -> Option<&'ll llvm::Value> {
        let cx = self.cx;
        let coverageinfo: &mir::CoverageInfo = cx.tcx.coverageinfo(instance.def_id());
        debug!(
            "Generate coverage map for: {:?}, num_counters: {}, num_expressions: {}",
            instance, coverageinfo.num_counters, coverageinfo.num_expressions
        );
        debug_assert!(coverageinfo.num_counters > 0);

        let regions_in_file_order = function_coverage.regions_in_file_order(cx.sess().source_map());
        if regions_in_file_order.len() == 0 {
            return None;
        }

        // Stream the coverage mapping regions for the function (`instance`) to the buffer, and
        // compute the data byte size used.
        let old_len = coverage_mappings_buffer.len();
        self.regions_to_mappings(regions_in_file_order, coverage_mappings_buffer);
        let mapping_data_size = coverage_mappings_buffer.len() - old_len;
        debug_assert!(mapping_data_size > 0);

        let mangled_function_name = cx.tcx.symbol_name(instance).to_string();
        let name_ref = coverageinfo::compute_hash(&mangled_function_name);
        let function_source_hash = function_coverage.source_hash();

        // Generate and return the function record
        let name_ref_val = cx.const_u64(name_ref);
        let mapping_data_size_val = cx.const_u32(mapping_data_size as u32);
        let func_hash_val = cx.const_u64(function_source_hash);
        Some(cx.const_struct(
            &[name_ref_val, mapping_data_size_val, func_hash_val],
            /*packed=*/ true,
        ))
    }

    /// For each coverage region, extract its coverage data from the earlier coverage analysis.
    /// Use LLVM APIs to convert the data into buffered bytes compliant with the LLVM Coverage
    /// Mapping format.
    fn regions_to_mappings(
        &mut self,
        regions_in_file_order: BTreeMap<PathBuf, BTreeMap<CoverageLoc, (usize, CoverageKind)>>,
        coverage_mappings_buffer: &RustString,
    ) {
        let mut virtual_file_mapping = Vec::new();
        let mut mapping_regions = coverageinfo::SmallVectorCounterMappingRegion::new();
        let mut expressions = coverageinfo::SmallVectorCounterExpression::new();

        for (file_id, (file_path, file_coverage_regions)) in
            regions_in_file_order.into_iter().enumerate()
        {
            let file_id = file_id as u32;
            let filename = CString::new(file_path.to_string_lossy().to_string())
                .expect("null error converting filename to C string");
            debug!(" file_id: {} = '{:?}'", file_id, filename);
            let filenames_index = match self.filename_to_index.get(&filename) {
                Some(index) => *index,
                None => {
                    let index = self.filenames.len() as u32;
                    self.filenames.push(filename.clone());
                    self.filename_to_index.insert(filename, index);
                    index
                }
            };
            virtual_file_mapping.push(filenames_index);

            let mut mapping_indexes = vec![0 as u32; file_coverage_regions.len()];
            for (mapping_index, (region_id, _)) in file_coverage_regions.values().enumerate() {
                mapping_indexes[*region_id] = mapping_index as u32;
            }

            for (region_loc, (region_id, region_kind)) in file_coverage_regions.into_iter() {
                let mapping_index = mapping_indexes[region_id];
                match region_kind {
                    CoverageKind::Counter => {
                        debug!(
                            " Counter {}, file_id: {}, region_loc: {}",
                            mapping_index, file_id, region_loc
                        );
                        mapping_regions.push_from(
                            mapping_index,
                            file_id,
                            region_loc.start_line,
                            region_loc.start_col,
                            region_loc.end_line,
                            region_loc.end_col,
                        );
                    }
                    CoverageKind::CounterExpression(lhs, op, rhs) => {
                        debug!(
                            " CounterExpression {} = {} {:?} {}, file_id: {}, region_loc: {:?}",
                            mapping_index, lhs, op, rhs, file_id, region_loc,
                        );
                        mapping_regions.push_from(
                            mapping_index,
                            file_id,
                            region_loc.start_line,
                            region_loc.start_col,
                            region_loc.end_line,
                            region_loc.end_col,
                        );
                        expressions.push_from(op, lhs, rhs);
                    }
                    CoverageKind::Unreachable => {
                        debug!(
                            " Unreachable region, file_id: {}, region_loc: {:?}",
                            file_id, region_loc,
                        );
                        bug!("Unreachable region not expected and not yet handled!")
                        // FIXME(richkadel): implement and call
                        // mapping_regions.push_from(...) for unreachable regions
                    }
                }
            }
        }

        // Encode and append the current function's coverage mapping data
        coverageinfo::write_mapping_to_buffer(
            virtual_file_mapping,
            expressions,
            mapping_regions,
            coverage_mappings_buffer,
        );
    }

    fn write_coverage_map(
        self,
        function_records: Vec<&'ll llvm::Value>,
        filenames_buffer: Vec<u8>,
        mut coverage_mappings_buffer: Vec<u8>,
    ) {
        let cx = self.cx;

        // Concatenate the encoded filenames and encoded coverage mappings, and add additional zero
        // bytes as-needed to ensure 8-byte alignment.
        let mut coverage_size = coverage_mappings_buffer.len();
        let filenames_size = filenames_buffer.len();
        let remaining_bytes =
            (filenames_size + coverage_size) % coverageinfo::COVMAP_VAR_ALIGN_BYTES;
        if remaining_bytes > 0 {
            let pad = coverageinfo::COVMAP_VAR_ALIGN_BYTES - remaining_bytes;
            coverage_mappings_buffer.append(&mut [0].repeat(pad));
            coverage_size += pad;
        }
        let filenames_and_coverage_mappings = [filenames_buffer, coverage_mappings_buffer].concat();
        let filenames_and_coverage_mappings_val =
            cx.const_bytes(&filenames_and_coverage_mappings[..]);

        debug!(
            "cov map: n_records = {}, filenames_size = {}, coverage_size = {}, 0-based version = {}",
            function_records.len(),
            filenames_size,
            coverage_size,
            coverageinfo::mapping_version()
        );

        // Create the coverage data header
        let n_records_val = cx.const_u32(function_records.len() as u32);
        let filenames_size_val = cx.const_u32(filenames_size as u32);
        let coverage_size_val = cx.const_u32(coverage_size as u32);
        let version_val = cx.const_u32(coverageinfo::mapping_version());
        let cov_data_header_val = cx.const_struct(
            &[n_records_val, filenames_size_val, coverage_size_val, version_val],
            /*packed=*/ false,
        );

        // Create the function records array
        let name_ref_from_u64 = cx.type_i64();
        let mapping_data_size_from_u32 = cx.type_i32();
        let func_hash_from_u64 = cx.type_i64();
        let function_record_ty = cx.type_struct(
            &[name_ref_from_u64, mapping_data_size_from_u32, func_hash_from_u64],
            /*packed=*/ true,
        );
        let function_records_val = cx.const_array(function_record_ty, &function_records[..]);

        // Create the complete LLVM coverage data value to add to the LLVM IR
        let cov_data_val = cx.const_struct(
            &[cov_data_header_val, function_records_val, filenames_and_coverage_mappings_val],
            /*packed=*/ false,
        );

        // Save the coverage data value to LLVM IR
        coverageinfo::save_map_to_mod(cx, cov_data_val);
    }
}
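
The padding step in `write_coverage_map` can be looked at in isolation. The following is a minimal standalone sketch, not code from this commit: `pad_to_alignment` and `ALIGN_BYTES` are hypothetical names chosen for illustration (the latter mirrors `COVMAP_VAR_ALIGN_BYTES`), and it uses `Vec::resize` rather than the `append` call in the diff.

```rust
/// Alignment assumed for the combined buffer (hypothetical stand-in for
/// `COVMAP_VAR_ALIGN_BYTES` in the diff).
const ALIGN_BYTES: usize = 8;

/// Appends zero bytes to `mappings` so that `filenames_len + mappings.len()`
/// becomes a multiple of `ALIGN_BYTES`. Returns the number of bytes added.
fn pad_to_alignment(filenames_len: usize, mappings: &mut Vec<u8>) -> usize {
    let remaining = (filenames_len + mappings.len()) % ALIGN_BYTES;
    if remaining == 0 {
        return 0;
    }
    let pad = ALIGN_BYTES - remaining;
    // Grow the buffer by `pad` zero bytes.
    mappings.resize(mappings.len() + pad, 0);
    pad
}

fn main() {
    let filenames = vec![1u8; 5];
    let mut mappings = vec![2u8; 6];
    let pad = pad_to_alignment(filenames.len(), &mut mappings);
    // 5 + 6 = 11 bytes, so 5 zero bytes are needed to reach 16.
    println!("pad = {}, total = {}", pad, filenames.len() + mappings.len());
}
```

Only the mappings buffer grows, which matches the diff: the padding is counted in `coverage_size`, while `filenames_size` is left unchanged.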

@@ -1,67 +1,44 @@
use crate::llvm;

use crate::builder::Builder;
use crate::common::CodegenCx;

use libc::c_uint;
use log::debug;
use rustc_codegen_ssa::coverageinfo::map::*;
use rustc_codegen_ssa::traits::{CoverageInfoBuilderMethods, CoverageInfoMethods};
use rustc_codegen_ssa::traits::{
    BaseTypeMethods, CoverageInfoBuilderMethods, CoverageInfoMethods, StaticMethods,
};
use rustc_data_structures::fx::FxHashMap;
use rustc_llvm::RustString;
use rustc_middle::ty::Instance;

use std::cell::RefCell;
use std::ffi::CString;

pub mod mapgen;

const COVMAP_VAR_ALIGN_BYTES: usize = 8;

/// A context object for maintaining all state needed by the coverageinfo module.
pub struct CrateCoverageContext<'tcx> {
    // Coverage region data for each instrumented function identified by DefId.
    pub(crate) coverage_regions: RefCell<FxHashMap<Instance<'tcx>, FunctionCoverageRegions>>,
    pub(crate) function_coverage_map: RefCell<FxHashMap<Instance<'tcx>, FunctionCoverage>>,
}

impl<'tcx> CrateCoverageContext<'tcx> {
    pub fn new() -> Self {
        Self { coverage_regions: Default::default() }
        Self { function_coverage_map: Default::default() }
    }
}

/// Generates and exports the Coverage Map.
// FIXME(richkadel): Actually generate and export the coverage map to LLVM.
// The current implementation is actually just debug messages to show the data is available.
pub fn finalize(cx: &CodegenCx<'_, '_>) {
    let coverage_regions = &*cx.coverage_context().coverage_regions.borrow();
    for instance in coverage_regions.keys() {
        let coverageinfo = cx.tcx.coverageinfo(instance.def_id());
        debug_assert!(coverageinfo.num_counters > 0);
        debug!(
            "Generate coverage map for: {:?}, hash: {}, num_counters: {}",
            instance, coverageinfo.hash, coverageinfo.num_counters
        );
        let function_coverage_regions = &coverage_regions[instance];
        for (index, region) in function_coverage_regions.indexed_regions() {
            match region.kind {
                CoverageKind::Counter => debug!(
                    " Counter {}, for {}..{}",
                    index, region.coverage_span.start_byte_pos, region.coverage_span.end_byte_pos
                ),
                CoverageKind::CounterExpression(lhs, op, rhs) => debug!(
                    " CounterExpression {} = {} {:?} {}, for {}..{}",
                    index,
                    lhs,
                    op,
                    rhs,
                    region.coverage_span.start_byte_pos,
                    region.coverage_span.end_byte_pos
                ),
            }
        }
        for unreachable in function_coverage_regions.unreachable_regions() {
            debug!(
                " Unreachable code region: {}..{}",
                unreachable.start_byte_pos, unreachable.end_byte_pos
            );
        }
    pub fn take_function_coverage_map(&self) -> FxHashMap<Instance<'tcx>, FunctionCoverage> {
        self.function_coverage_map.replace(FxHashMap::default())
    }
}

impl CoverageInfoMethods for CodegenCx<'ll, 'tcx> {
    fn coverageinfo_finalize(&self) {
        finalize(self)
        mapgen::finalize(self)
    }
}

@@ -69,20 +46,22 @@ impl CoverageInfoBuilderMethods<'tcx> for Builder<'a, 'll, 'tcx> {
    fn add_counter_region(
        &mut self,
        instance: Instance<'tcx>,
        function_source_hash: u64,
        index: u32,
        start_byte_pos: u32,
        end_byte_pos: u32,
    ) {
        debug!(
            "adding counter to coverage map: instance={:?}, index={}, byte range {}..{}",
            instance, index, start_byte_pos, end_byte_pos,
        );
        let mut coverage_regions = self.coverage_context().coverage_regions.borrow_mut();
        coverage_regions.entry(instance).or_default().add_counter(
            index,
            start_byte_pos,
            end_byte_pos,
            "adding counter to coverage_regions: instance={:?}, function_source_hash={}, index={}, byte range {}..{}",
            instance, function_source_hash, index, start_byte_pos, end_byte_pos,
        );
        let mut coverage_regions = self.coverage_context().function_coverage_map.borrow_mut();
        coverage_regions
            .entry(instance)
            .or_insert_with(|| {
                FunctionCoverage::with_coverageinfo(self.tcx.coverageinfo(instance.def_id()))
            })
            .add_counter(function_source_hash, index, start_byte_pos, end_byte_pos);
    }

    fn add_counter_expression_region(

@@ -96,18 +75,16 @@ impl CoverageInfoBuilderMethods<'tcx> for Builder<'a, 'll, 'tcx> {
        end_byte_pos: u32,
    ) {
        debug!(
            "adding counter expression to coverage map: instance={:?}, index={}, {} {:?} {}, byte range {}..{}",
            "adding counter expression to coverage_regions: instance={:?}, index={}, {} {:?} {}, byte range {}..{}",
            instance, index, lhs, op, rhs, start_byte_pos, end_byte_pos,
        );
        let mut coverage_regions = self.coverage_context().coverage_regions.borrow_mut();
        coverage_regions.entry(instance).or_default().add_counter_expression(
            index,
            lhs,
            op,
            rhs,
            start_byte_pos,
            end_byte_pos,
        );
        let mut coverage_regions = self.coverage_context().function_coverage_map.borrow_mut();
        coverage_regions
            .entry(instance)
            .or_insert_with(|| {
                FunctionCoverage::with_coverageinfo(self.tcx.coverageinfo(instance.def_id()))
            })
            .add_counter_expression(index, lhs, op, rhs, start_byte_pos, end_byte_pos);
    }

    fn add_unreachable_region(

@@ -117,10 +94,175 @@ impl CoverageInfoBuilderMethods<'tcx> for Builder<'a, 'll, 'tcx> {
        end_byte_pos: u32,
    ) {
        debug!(
            "adding unreachable code to coverage map: instance={:?}, byte range {}..{}",
            "adding unreachable code to coverage_regions: instance={:?}, byte range {}..{}",
            instance, start_byte_pos, end_byte_pos,
        );
        let mut coverage_regions = self.coverage_context().coverage_regions.borrow_mut();
        coverage_regions.entry(instance).or_default().add_unreachable(start_byte_pos, end_byte_pos);
        let mut coverage_regions = self.coverage_context().function_coverage_map.borrow_mut();
        coverage_regions
            .entry(instance)
            .or_insert_with(|| {
                FunctionCoverage::with_coverageinfo(self.tcx.coverageinfo(instance.def_id()))
            })
            .add_unreachable(start_byte_pos, end_byte_pos);
    }
}

/// This struct wraps an opaque reference to the C++ template instantiation of
/// `llvm::SmallVector<coverage::CounterExpression>`. Each `coverage::CounterExpression` object is
/// constructed from primitive-typed arguments, and pushed to the `SmallVector`, in the C++
/// implementation of `LLVMRustCoverageSmallVectorCounterExpressionAdd()` (see
/// `src/rustllvm/CoverageMappingWrapper.cpp`).
pub struct SmallVectorCounterExpression<'a> {
    pub raw: &'a mut llvm::coverageinfo::SmallVectorCounterExpression<'a>,
}

impl SmallVectorCounterExpression<'a> {
    pub fn new() -> Self {
        SmallVectorCounterExpression {
            raw: unsafe { llvm::LLVMRustCoverageSmallVectorCounterExpressionCreate() },
        }
    }

    pub fn as_ptr(&self) -> *const llvm::coverageinfo::SmallVectorCounterExpression<'a> {
        self.raw
    }

    pub fn push_from(
        &mut self,
        kind: rustc_codegen_ssa::coverageinfo::CounterOp,
        left_index: u32,
        right_index: u32,
    ) {
        unsafe {
            llvm::LLVMRustCoverageSmallVectorCounterExpressionAdd(
                &mut *(self.raw as *mut _),
                kind,
                left_index,
                right_index,
            )
        }
    }
}

impl Drop for SmallVectorCounterExpression<'a> {
    fn drop(&mut self) {
        unsafe {
            llvm::LLVMRustCoverageSmallVectorCounterExpressionDispose(&mut *(self.raw as *mut _));
        }
    }
}

/// This struct wraps an opaque reference to the C++ template instantiation of
/// `llvm::SmallVector<coverage::CounterMappingRegion>`. Each `coverage::CounterMappingRegion`
/// object is constructed from primitive-typed arguments, and pushed to the `SmallVector`, in the
/// C++ implementation of `LLVMRustCoverageSmallVectorCounterMappingRegionAdd()` (see
/// `src/rustllvm/CoverageMappingWrapper.cpp`).
pub struct SmallVectorCounterMappingRegion<'a> {
    pub raw: &'a mut llvm::coverageinfo::SmallVectorCounterMappingRegion<'a>,
}

impl SmallVectorCounterMappingRegion<'a> {
    pub fn new() -> Self {
        SmallVectorCounterMappingRegion {
            raw: unsafe { llvm::LLVMRustCoverageSmallVectorCounterMappingRegionCreate() },
        }
    }

    pub fn as_ptr(&self) -> *const llvm::coverageinfo::SmallVectorCounterMappingRegion<'a> {
        self.raw
    }

    pub fn push_from(
        &mut self,
        index: u32,
        file_id: u32,
        line_start: u32,
        column_start: u32,
        line_end: u32,
        column_end: u32,
    ) {
        unsafe {
            llvm::LLVMRustCoverageSmallVectorCounterMappingRegionAdd(
                &mut *(self.raw as *mut _),
                index,
                file_id,
                line_start,
                column_start,
                line_end,
                column_end,
            )
        }
    }
}

impl Drop for SmallVectorCounterMappingRegion<'a> {
    fn drop(&mut self) {
        unsafe {
            llvm::LLVMRustCoverageSmallVectorCounterMappingRegionDispose(
                &mut *(self.raw as *mut _),
            );
        }
    }
}

pub(crate) fn write_filenames_section_to_buffer(filenames: &Vec<CString>, buffer: &RustString) {
    let c_str_vec = filenames.iter().map(|cstring| cstring.as_ptr()).collect::<Vec<_>>();
    unsafe {
        llvm::LLVMRustCoverageWriteFilenamesSectionToBuffer(
            c_str_vec.as_ptr(),
            c_str_vec.len(),
            buffer,
        );
    }
}

pub(crate) fn write_mapping_to_buffer(
    virtual_file_mapping: Vec<u32>,
    expressions: SmallVectorCounterExpression<'_>,
    mapping_regions: SmallVectorCounterMappingRegion<'_>,
    buffer: &RustString,
) {
    unsafe {
        llvm::LLVMRustCoverageWriteMappingToBuffer(
            virtual_file_mapping.as_ptr(),
            virtual_file_mapping.len() as c_uint,
            expressions.as_ptr(),
            mapping_regions.as_ptr(),
            buffer,
        );
    }
}

pub(crate) fn compute_hash(name: &str) -> u64 {
    let name = CString::new(name).expect("null error converting hashable name to C string");
    unsafe { llvm::LLVMRustCoverageComputeHash(name.as_ptr()) }
}

pub(crate) fn mapping_version() -> u32 {
    unsafe { llvm::LLVMRustCoverageMappingVersion() }
}

pub(crate) fn save_map_to_mod<'ll, 'tcx>(
    cx: &CodegenCx<'ll, 'tcx>,
    cov_data_val: &'ll llvm::Value,
) {
    let covmap_var_name = llvm::build_string(|s| unsafe {
        llvm::LLVMRustCoverageWriteMappingVarNameToString(s);
    })
    .expect("Rust Coverage Mapping var name failed UTF-8 conversion");
    debug!("covmap var name: {:?}", covmap_var_name);

    let covmap_section_name = llvm::build_string(|s| unsafe {
        llvm::LLVMRustCoverageWriteSectionNameToString(cx.llmod, s);
    })
    .expect("Rust Coverage section name failed UTF-8 conversion");
    debug!("covmap section name: {:?}", covmap_section_name);

    let llglobal = llvm::add_global(cx.llmod, cx.val_ty(cov_data_val), &covmap_var_name);
    llvm::set_initializer(llglobal, cov_data_val);
    llvm::set_global_constant(llglobal, true);
    llvm::set_linkage(llglobal, llvm::Linkage::InternalLinkage);
    llvm::set_section(llglobal, &covmap_section_name);
    llvm::set_alignment(llglobal, COVMAP_VAR_ALIGN_BYTES);
    cx.add_used_global(llglobal);
}

|

||||

|

||||

@ -90,45 +90,64 @@ impl IntrinsicCallMethods<'tcx> for Builder<'a, 'll, 'tcx> {

|

||||

args: &Vec<Operand<'tcx>>,

|

||||

caller_instance: ty::Instance<'tcx>,

|

||||

) -> bool {

|

||||

match intrinsic {

|

||||

sym::count_code_region => {

|

||||

use coverage::count_code_region_args::*;

|

||||

self.add_counter_region(

|

||||

caller_instance,

|

||||

op_to_u32(&args[COUNTER_INDEX]),

|

||||

op_to_u32(&args[START_BYTE_POS]),

|

||||

op_to_u32(&args[END_BYTE_POS]),

|

||||

);

|

||||

true // Also inject the counter increment in the backend

|

||||

if self.tcx.sess.opts.debugging_opts.instrument_coverage {

|

||||

// Add the coverage information from the MIR to the Codegen context. Some coverage

|

||||

// intrinsics are used only to pass along the coverage information (returns `false`

|

||||

// for `is_codegen_intrinsic()`), but `count_code_region` is also converted into an

|

||||

// LLVM intrinsic to increment a coverage counter.

|

||||

match intrinsic {

|

||||

sym::count_code_region => {

|

||||

use coverage::count_code_region_args::*;

|

||||

self.add_counter_region(

|

||||

caller_instance,

|

||||

op_to_u64(&args[FUNCTION_SOURCE_HASH]),

|

||||

op_to_u32(&args[COUNTER_INDEX]),

|

||||

op_to_u32(&args[START_BYTE_POS]),

|

||||

op_to_u32(&args[END_BYTE_POS]),

|

||||

);

|

||||

return true; // Also inject the counter increment in the backend

|

||||

}

|

||||

sym::coverage_counter_add | sym::coverage_counter_subtract => {

|

||||

use coverage::coverage_counter_expression_args::*;

|

||||

self.add_counter_expression_region(

|

||||

caller_instance,

|

||||

op_to_u32(&args[COUNTER_EXPRESSION_INDEX]),

|

||||

op_to_u32(&args[LEFT_INDEX]),

|

||||

if intrinsic == sym::coverage_counter_add {

|

||||

CounterOp::Add

|

||||

} else {

|

||||

CounterOp::Subtract

|

||||

},

|

||||

op_to_u32(&args[RIGHT_INDEX]),

|

||||

op_to_u32(&args[START_BYTE_POS]),

|

||||

op_to_u32(&args[END_BYTE_POS]),

|

||||

);

|

||||

return false; // Does not inject backend code

|

||||

}

|

||||

sym::coverage_unreachable => {

|

||||

use coverage::coverage_unreachable_args::*;

|

||||

self.add_unreachable_region(

|

||||

caller_instance,

|

||||

op_to_u32(&args[START_BYTE_POS]),

|

||||

op_to_u32(&args[END_BYTE_POS]),

|

||||

);

|

||||

return false; // Does not inject backend code

|

||||

}

|

||||

_ => {}

|

||||

}

|

||||

sym::coverage_counter_add | sym::coverage_counter_subtract => {

|

||||

use coverage::coverage_counter_expression_args::*;

|

||||

self.add_counter_expression_region(

|

||||

caller_instance,

|

||||

op_to_u32(&args[COUNTER_EXPRESSION_INDEX]),

|

||||

op_to_u32(&args[LEFT_INDEX]),

|

||||

if intrinsic == sym::coverage_counter_add {

|

||||

CounterOp::Add

|

||||

} else {

|

||||

CounterOp::Subtract

|

||||

},

|

||||

op_to_u32(&args[RIGHT_INDEX]),

|

||||

op_to_u32(&args[START_BYTE_POS]),

|

||||

op_to_u32(&args[END_BYTE_POS]),

|

||||

);

|

||||

false // Does not inject backend code

|

||||

} else {

|

||||

// NOT self.tcx.sess.opts.debugging_opts.instrument_coverage

|

||||

if intrinsic == sym::count_code_region {

|

||||

// An external crate may have been pre-compiled with coverage instrumentation, and

|

||||

// some references from the current crate to the external crate might carry along

|

||||

// the call terminators to coverage intrinsics, like `count_code_region` (for

|

||||

// example, when instantiating a generic function). If the current crate has

|

||||

// `instrument_coverage` disabled, the `count_code_region` call terminators should

|

||||

// be ignored.

|

||||

return false; // Do not inject coverage counters inlined from external crates

|

||||

}

|

||||

sym::coverage_unreachable => {

|

||||

use coverage::coverage_unreachable_args::*;

|

||||

self.add_unreachable_region(

|

||||

caller_instance,

|

||||

op_to_u32(&args[START_BYTE_POS]),

|

||||

op_to_u32(&args[END_BYTE_POS]),

|

||||

);

|

||||

false // Does not inject backend code

|

||||

}

|

||||

_ => true, // Unhandled intrinsics should be passed to `codegen_intrinsic_call()`

|

||||

}

|

||||

true // Unhandled intrinsics should be passed to `codegen_intrinsic_call()`

|

||||

}

|

||||

|

||||

fn codegen_intrinsic_call(

|

||||

@ -197,12 +216,13 @@ impl IntrinsicCallMethods<'tcx> for Builder<'a, 'll, 'tcx> {

|

||||

let coverageinfo = tcx.coverageinfo(caller_instance.def_id());

|

||||

let mangled_fn = tcx.symbol_name(caller_instance);

|

||||

let (mangled_fn_name, _len_val) = self.const_str(Symbol::intern(mangled_fn.name));

|

||||

let hash = self.const_u64(coverageinfo.hash);

|

||||

let num_counters = self.const_u32(coverageinfo.num_counters);

|

||||

use coverage::count_code_region_args::*;

|

||||

let hash = args[FUNCTION_SOURCE_HASH].immediate();

|

||||

let index = args[COUNTER_INDEX].immediate();

|

||||

debug!(

|

||||

"count_code_region to LLVM intrinsic instrprof.increment(fn_name={}, hash={:?}, num_counters={:?}, index={:?})",

|

||||

"translating Rust intrinsic `count_code_region()` to LLVM intrinsic: \

|

||||

instrprof.increment(fn_name={}, hash={:?}, num_counters={:?}, index={:?})",

|

||||

mangled_fn.name, hash, num_counters, index,

|

||||

);

|

||||

self.instrprof_increment(mangled_fn_name, hash, num_counters, index)

|

||||

@ -2222,3 +2242,7 @@ fn float_type_width(ty: Ty<'_>) -> Option<u64> {

|

||||

fn op_to_u32<'tcx>(op: &Operand<'tcx>) -> u32 {

|

||||

Operand::scalar_from_const(op).to_u32().expect("Scalar is u32")

|

||||

}

|

||||

|

||||

fn op_to_u64<'tcx>(op: &Operand<'tcx>) -> u64 {

|

||||

Operand::scalar_from_const(op).to_u64().expect("Scalar is u64")

|

||||

}

|

||||

|

||||

@ -1,6 +1,8 @@

|

||||

#![allow(non_camel_case_types)]

|

||||

#![allow(non_upper_case_globals)]

|

||||

|

||||

use super::coverageinfo::{SmallVectorCounterExpression, SmallVectorCounterMappingRegion};

|

||||

|

||||

use super::debuginfo::{

|

||||

DIArray, DIBasicType, DIBuilder, DICompositeType, DIDerivedType, DIDescriptor, DIEnumerator,

|

||||

DIFile, DIFlags, DIGlobalVariableExpression, DILexicalBlock, DINameSpace, DISPFlags, DIScope,

|

||||

@ -650,6 +652,16 @@ pub struct Linker<'a>(InvariantOpaque<'a>);

|

||||

pub type DiagnosticHandler = unsafe extern "C" fn(&DiagnosticInfo, *mut c_void);

|

||||

pub type InlineAsmDiagHandler = unsafe extern "C" fn(&SMDiagnostic, *const c_void, c_uint);

|

||||

|

||||

pub mod coverageinfo {

|

||||

use super::InvariantOpaque;

|

||||

|

||||

#[repr(C)]

|

||||

pub struct SmallVectorCounterExpression<'a>(InvariantOpaque<'a>);

|

||||

|

||||

#[repr(C)]

|

||||

pub struct SmallVectorCounterMappingRegion<'a>(InvariantOpaque<'a>);

|

||||

}

|

||||

|

||||

pub mod debuginfo {

|

||||

use super::{InvariantOpaque, Metadata};

|

||||

use bitflags::bitflags;

|

||||

@ -1365,7 +1377,7 @@ extern "C" {

|

||||

|

||||

// Miscellaneous instructions

|

||||

pub fn LLVMBuildPhi(B: &Builder<'a>, Ty: &'a Type, Name: *const c_char) -> &'a Value;

|

||||

pub fn LLVMRustGetInstrprofIncrementIntrinsic(M: &Module) -> &'a Value;

|

||||

pub fn LLVMRustGetInstrProfIncrementIntrinsic(M: &Module) -> &'a Value;

|

||||

pub fn LLVMRustBuildCall(

|

||||

B: &Builder<'a>,

|

||||

Fn: &'a Value,

|

||||

@ -1633,6 +1645,58 @@ extern "C" {

|

||||

ConstraintsLen: size_t,

|

||||

) -> bool;

|

||||

|

||||

pub fn LLVMRustCoverageSmallVectorCounterExpressionCreate()

|

||||

-> &'a mut SmallVectorCounterExpression<'a>;

|

||||

pub fn LLVMRustCoverageSmallVectorCounterExpressionDispose(

|

||||

Container: &'a mut SmallVectorCounterExpression<'a>,

|

||||

);

|

||||

pub fn LLVMRustCoverageSmallVectorCounterExpressionAdd(

|

||||

Container: &mut SmallVectorCounterExpression<'a>,

|

||||

Kind: rustc_codegen_ssa::coverageinfo::CounterOp,

|

||||

LeftIndex: c_uint,

|

||||

RightIndex: c_uint,

|

||||

);

|

||||

|

||||

pub fn LLVMRustCoverageSmallVectorCounterMappingRegionCreate()

|

||||

-> &'a mut SmallVectorCounterMappingRegion<'a>;

|

||||

pub fn LLVMRustCoverageSmallVectorCounterMappingRegionDispose(

|

||||

Container: &'a mut SmallVectorCounterMappingRegion<'a>,

|

||||

);

|

||||

pub fn LLVMRustCoverageSmallVectorCounterMappingRegionAdd(

|

||||

Container: &mut SmallVectorCounterMappingRegion<'a>,

|

||||

Index: c_uint,

|

||||

FileID: c_uint,

|

||||

LineStart: c_uint,

|

||||

ColumnStart: c_uint,

|

||||

LineEnd: c_uint,

|

||||

ColumnEnd: c_uint,

|

||||

);

|

||||

|

||||

#[allow(improper_ctypes)]

|

||||

pub fn LLVMRustCoverageWriteFilenamesSectionToBuffer(

|

||||

Filenames: *const *const c_char,

|

||||

FilenamesLen: size_t,

|

||||

BufferOut: &RustString,

|

||||

);

|

||||

|

||||

#[allow(improper_ctypes)]

|

||||

pub fn LLVMRustCoverageWriteMappingToBuffer(

|

||||

VirtualFileMappingIDs: *const c_uint,

|

||||

NumVirtualFileMappingIDs: c_uint,

|

||||

Expressions: *const SmallVectorCounterExpression<'_>,

|

||||

MappingRegions: *const SmallVectorCounterMappingRegion<'_>,

|

||||

BufferOut: &RustString,

|

||||

);

|

||||

|

||||

pub fn LLVMRustCoverageComputeHash(Name: *const c_char) -> u64;

|

||||

|

||||

#[allow(improper_ctypes)]

|

||||

pub fn LLVMRustCoverageWriteSectionNameToString(M: &Module, Str: &RustString);

|

||||

|

||||

#[allow(improper_ctypes)]

|

||||

pub fn LLVMRustCoverageWriteMappingVarNameToString(Str: &RustString);

|

||||

|

||||

pub fn LLVMRustCoverageMappingVersion() -> u32;

|

||||

pub fn LLVMRustDebugMetadataVersion() -> u32;

|

||||

pub fn LLVMRustVersionMajor() -> u32;

|

||||

pub fn LLVMRustVersionMinor() -> u32;

|

||||

|

||||

@ -12,7 +12,7 @@ use libc::c_uint;

|

||||

use rustc_data_structures::small_c_str::SmallCStr;

|

||||

use rustc_llvm::RustString;

|

||||

use std::cell::RefCell;

|

||||

use std::ffi::CStr;

|

||||

use std::ffi::{CStr, CString};

|

||||

use std::str::FromStr;

|

||||

use std::string::FromUtf8Error;

|

||||

|

||||

@ -189,6 +189,42 @@ pub fn mk_section_iter(llof: &ffi::ObjectFile) -> SectionIter<'_> {

|

||||

unsafe { SectionIter { llsi: LLVMGetSections(llof) } }

|

||||

}

|

||||

|

||||

pub fn set_section(llglobal: &Value, section_name: &str) {

|

||||

let section_name_cstr = CString::new(section_name).expect("unexpected CString error");

|

||||

unsafe {

|

||||

LLVMSetSection(llglobal, section_name_cstr.as_ptr());

|

||||

}

|

||||

}

|

||||

|

||||

pub fn add_global<'a>(llmod: &'a Module, ty: &'a Type, name: &str) -> &'a Value {

|

||||

let name_cstr = CString::new(name).expect("unexpected CString error");

|

||||

unsafe { LLVMAddGlobal(llmod, ty, name_cstr.as_ptr()) }

|

||||

}

|

||||

|

||||

pub fn set_initializer(llglobal: &Value, constant_val: &Value) {

|

||||

unsafe {

|

||||

LLVMSetInitializer(llglobal, constant_val);

|

||||

}

|

||||

}

|

||||

|

||||

pub fn set_global_constant(llglobal: &Value, is_constant: bool) {

|

||||

unsafe {

|

||||

LLVMSetGlobalConstant(llglobal, if is_constant { ffi::True } else { ffi::False });

|

||||

}

|

||||

}

|

||||

|

||||

pub fn set_linkage(llglobal: &Value, linkage: Linkage) {

|

||||

unsafe {

|

||||

LLVMRustSetLinkage(llglobal, linkage);

|

||||

}

|

||||

}

|

||||

|

||||

pub fn set_alignment(llglobal: &Value, bytes: usize) {

|

||||

unsafe {

|

||||

ffi::LLVMSetAlignment(llglobal, bytes as c_uint);

|

||||

}

|

||||

}

|

||||

|

||||

/// Safe wrapper around `LLVMGetParam`, because segfaults are no fun.

|

||||

pub fn get_param(llfn: &Value, index: c_uint) -> &Value {

|

||||

unsafe {

|

||||

@ -225,6 +261,12 @@ pub fn build_string(f: impl FnOnce(&RustString)) -> Result<String, FromUtf8Error

|

||||

String::from_utf8(sr.bytes.into_inner())

|

||||

}

|

||||

|

||||

pub fn build_byte_buffer(f: impl FnOnce(&RustString)) -> Vec<u8> {

|

||||

let sr = RustString { bytes: RefCell::new(Vec::new()) };

|

||||

f(&sr);

|

||||

sr.bytes.into_inner()

|

||||

}
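`build_byte_buffer` differs from `build_string` only in skipping the UTF-8 check, which matters because encoded coverage data is binary. A minimal standalone sketch of the same pattern follows; `ByteSink`, `build_bytes`, and `build_text` are hypothetical stand-ins for `RustString` and the two builders, not the compiler's types.

```rust
use std::cell::RefCell;
use std::string::FromUtf8Error;

/// Hypothetical stand-in for `RustString`: a buffer that a callback can
/// append to through a shared reference.
pub struct ByteSink {
    bytes: RefCell<Vec<u8>>,
}

impl ByteSink {
    pub fn write(&self, data: &[u8]) {
        self.bytes.borrow_mut().extend_from_slice(data);
    }
}

/// Returns whatever the callback wrote, with no encoding check.
pub fn build_bytes(f: impl FnOnce(&ByteSink)) -> Vec<u8> {
    let sink = ByteSink { bytes: RefCell::new(Vec::new()) };
    f(&sink);
    sink.bytes.into_inner()
}

/// Same as `build_bytes`, but fails if the bytes are not valid UTF-8.
pub fn build_text(f: impl FnOnce(&ByteSink)) -> Result<String, FromUtf8Error> {
    String::from_utf8(build_bytes(f))
}
```

With these definitions, a callback that writes the byte `0xff` succeeds through `build_bytes` but produces an error through `build_text`, which is why a separate byte-oriented builder is useful for binary payloads.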

|

||||

|

||||

pub fn twine_to_string(tr: &Twine) -> String {

|

||||

unsafe {

|

||||

build_string(|s| LLVMRustWriteTwineToString(tr, s)).expect("got a non-UTF8 Twine from LLVM")

|

||||

|

||||

@ -1659,7 +1659,7 @@ fn linker_with_args<'a, B: ArchiveBuilder<'a>>(

|

||||

// FIXME: Order dependent, applies to the following objects. Where should it be placed?

|

||||

// Try to strip as much out of the generated object by removing unused

|

||||

// sections if possible. See more comments in linker.rs

|

||||

if !sess.opts.cg.link_dead_code {

|

||||

if sess.opts.cg.link_dead_code != Some(true) {

|

||||

let keep_metadata = crate_type == CrateType::Dylib;

|

||||

cmd.gc_sections(keep_metadata);

|

||||

}
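The new condition compares `link_dead_code` against `Some(true)`, which suggests the option is now an `Option<bool>` rather than a plain `bool`. Under that assumption, the sketch below spells out the three cases; `should_gc_sections` is a hypothetical name used only for illustration.

```rust
/// Hypothetical helper mirroring the new condition: unused sections are
/// stripped unless dead-code linking was explicitly enabled.
pub fn should_gc_sections(link_dead_code: Option<bool>) -> bool {
    link_dead_code != Some(true)
}
```

Both an unset option (`None`) and an explicit `Some(false)` keep section garbage collection on; only `Some(true)` turns it off.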

|

||||

@ -1695,7 +1695,7 @@ fn linker_with_args<'a, B: ArchiveBuilder<'a>>(

|

||||

);

|

||||

|

||||

// OBJECT-FILES-NO, AUDIT-ORDER

|

||||

if sess.opts.cg.profile_generate.enabled() {

|

||||

if sess.opts.cg.profile_generate.enabled() || sess.opts.debugging_opts.instrument_coverage {

|

||||

cmd.pgo_gen();

|

||||

}

|

||||

|

||||

|

||||

@ -203,6 +203,17 @@ fn exported_symbols_provider_local(

|

||||

}));

|

||||

}

|

||||

|

||||

if tcx.sess.opts.debugging_opts.instrument_coverage {

|

||||

// Similar to PGO profiling, preserve symbols used by LLVM InstrProf coverage profiling.

|

||||

const COVERAGE_WEAK_SYMBOLS: [&str; 3] =

|

||||

["__llvm_profile_filename", "__llvm_coverage_mapping", "__llvm_covmap"];

|

||||

|

||||

symbols.extend(COVERAGE_WEAK_SYMBOLS.iter().map(|sym| {

|

||||

let exported_symbol = ExportedSymbol::NoDefId(SymbolName::new(tcx, sym));

|

||||

(exported_symbol, SymbolExportLevel::C)

|

||||

}));

|

||||

}

|

||||

|

||||

if tcx.sess.opts.debugging_opts.sanitizer.contains(SanitizerSet::MEMORY) {

|

||||

// Similar to profiling, preserve weak msan symbol during LTO.

|

||||

const MSAN_WEAK_SYMBOLS: [&str; 2] = ["__msan_track_origins", "__msan_keep_going"];

|

||||

|

||||

@ -1,32 +1,154 @@

|

||||

use rustc_data_structures::fx::FxHashMap;

|

||||

use std::collections::hash_map;

|

||||

use std::slice;

|

||||

use rustc_data_structures::sync::Lrc;

|

||||

use rustc_middle::mir;

|

||||

use rustc_span::source_map::{Pos, SourceFile, SourceMap};

|

||||

use rustc_span::{BytePos, FileName, RealFileName};

|

||||

|

||||

use std::cmp::{Ord, Ordering};

|

||||

use std::collections::BTreeMap;

|

||||

use std::fmt;

|

||||

use std::path::PathBuf;

|

||||

|

||||

#[derive(Copy, Clone, Debug)]

|

||||

#[repr(C)]

|

||||

pub enum CounterOp {

|

||||

Add,

|

||||

// Note the order (and therefore the default values) is important. With the attribute

|

||||

// `#[repr(C)]`, this enum matches the layout of the LLVM enum defined for the nested enum,

|

||||

// `llvm::coverage::CounterExpression::ExprKind`, as shown in the following source snippet:

|

||||

// https://github.com/rust-lang/llvm-project/blob/f208b70fbc4dee78067b3c5bd6cb92aa3ba58a1e/llvm/include/llvm/ProfileData/Coverage/CoverageMapping.h#L146

|

||||

Subtract,

|

||||

Add,

|

||||

}
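The comment above says the variant order matters because the enum is passed across FFI and must match LLVM's `ExprKind`. A small sketch of why reordering changes the ABI value, assuming (as the new order implies) that LLVM lists `Subtract` first; `ExprKindSketch` and `discriminant` are hypothetical names.

```rust
/// Hypothetical mirror of `CounterOp` after the reorder.
/// Without explicit values, variants are numbered from 0 in declaration order.
#[derive(Copy, Clone, Debug, PartialEq, Eq)]
#[repr(C)]
pub enum ExprKindSketch {
    Subtract, // discriminant 0
    Add,      // discriminant 1
}

/// Returns the integer value that would cross the FFI boundary.
pub fn discriminant(kind: ExprKindSketch) -> u32 {
    kind as u32
}
```

Swapping the two variants would silently swap the integers seen on the C++ side, so an `Add` expression would be recorded as a `Subtract` and vice versa.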

|

||||

|

||||

#[derive(Copy, Clone, Debug)]

|

||||

pub enum CoverageKind {

|

||||

Counter,

|

||||

CounterExpression(u32, CounterOp, u32),

|

||||

Unreachable,

|

||||

}

|

||||

|

||||

pub struct CoverageSpan {

|

||||

#[derive(Clone, Debug)]

|

||||

pub struct CoverageRegion {

|

||||

pub kind: CoverageKind,

|

||||

pub start_byte_pos: u32,

|

||||

pub end_byte_pos: u32,

|

||||

}

|

||||

|

||||

pub struct CoverageRegion {

|

||||

pub kind: CoverageKind,

|

||||

pub coverage_span: CoverageSpan,

|

||||

impl CoverageRegion {

|

||||

pub fn source_loc(&self, source_map: &SourceMap) -> Option<(Lrc<SourceFile>, CoverageLoc)> {

|

||||

let (start_file, start_line, start_col) =

|

||||

lookup_file_line_col(source_map, BytePos::from_u32(self.start_byte_pos));

|

||||

let (end_file, end_line, end_col) =

|

||||

lookup_file_line_col(source_map, BytePos::from_u32(self.end_byte_pos));

|

||||

let start_file_path = match &start_file.name {

|

||||

FileName::Real(RealFileName::Named(path)) => path,

|

||||

_ => {

|

||||

bug!("start_file_path should be a RealFileName, but it was: {:?}", start_file.name)

|

||||

}

|

||||

};

|

||||

let end_file_path = match &end_file.name {

|

||||

FileName::Real(RealFileName::Named(path)) => path,

|

||||

_ => bug!("end_file_path should be a RealFileName, but it was: {:?}", end_file.name),

|

||||

};

|

||||

if start_file_path == end_file_path {

|

||||

Some((start_file, CoverageLoc { start_line, start_col, end_line, end_col }))

|

||||

} else {

|

||||

None

|

||||

// FIXME(richkadel): There seems to be a problem computing the file location in

|

||||

// some cases. I need to investigate this more. When I generate and show coverage

|

||||

// for the example binary in the crates.io crate `json5format`, I had a couple of

|

||||

// notable problems:

|

||||

//

|

||||

// 1. I saw a lot of coverage spans in `llvm-cov show` highlighting regions in

|

||||

// various comments (not corresponding to rustdoc code), indicating a possible

|

||||

// problem with the byte_pos-to-source-map implementation.

|

||||

//

|

||||

// 2. And (perhaps not related) when I build the aforementioned example binary with:

|

||||

// `RUSTFLAGS="-Zinstrument-coverage" cargo build --example formatjson5`

|

||||

// and then run that binary with

|

||||

// `LLVM_PROFILE_FILE="formatjson5.profraw" ./target/debug/examples/formatjson5 \

|

||||

// some.json5` for some reason the binary generates *TWO* `.profraw` files. One

|

||||

// named `default.profraw` and the other named `formatjson5.profraw` (the expected

|

||||

// name, in this case).

|

||||

//

|

||||

// If the byte range conversion is wrong, fix it. But if it

|

||||

// is right, then it is possible for the start and end to be in different files.

|

||||

// Can I do something other than ignore coverages that span multiple files?

|

||||

//

|

||||

// If I can resolve this, remove the "Option<>" result type wrapper

|

||||

// and update `regions_in_file_order()` accordingly.

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

impl Default for CoverageRegion {

|

||||

fn default() -> Self {

|

||||

Self {

|

||||

// The default kind (Unreachable) is a placeholder that will be overwritten before

|

||||

// backend codegen.

|

||||

kind: CoverageKind::Unreachable,

|

||||

start_byte_pos: 0,

|

||||

end_byte_pos: 0,

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

/// A source code region used with coverage information.

|

||||

#[derive(Debug, Eq, PartialEq)]

|

||||

pub struct CoverageLoc {

|

||||

/// The (1-based) line number of the region start.

|

||||

pub start_line: u32,

|

||||

/// The (1-based) column number of the region start.

|

||||

pub start_col: u32,

|

||||

/// The (1-based) line number of the region end.

|

||||

pub end_line: u32,

|

||||

/// The (1-based) column number of the region end.

|

||||

pub end_col: u32,

|

||||

}

|

||||

|

||||

impl Ord for CoverageLoc {

|

||||

fn cmp(&self, other: &Self) -> Ordering {

|

||||

(self.start_line, &self.start_col, &self.end_line, &self.end_col).cmp(&(

|

||||

other.start_line,

|

||||

&other.start_col,

|

||||

&other.end_line,

|

||||

&other.end_col,

|

||||

))

|

||||

}

|

||||

}

|

||||

|

||||

impl PartialOrd for CoverageLoc {

|

||||

fn partial_cmp(&self, other: &Self) -> Option<Ordering> {

|

||||

Some(self.cmp(other))

|

||||

}

|

||||

}
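The `Ord` implementation compares locations as a tuple, so regions sort by start line, then start column, then end line, then end column. The following sketch reproduces that ordering on a hypothetical `LocSketch` type (a stand-in for `CoverageLoc`) and sorts a few regions into source order.

```rust
use std::cmp::Ordering;

/// Hypothetical stand-in for `CoverageLoc`; all fields are 1-based.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct LocSketch {
    pub start_line: u32,
    pub start_col: u32,
    pub end_line: u32,
    pub end_col: u32,
}

impl Ord for LocSketch {
    fn cmp(&self, other: &Self) -> Ordering {
        // Tuples compare field by field, left to right.
        (self.start_line, self.start_col, self.end_line, self.end_col)
            .cmp(&(other.start_line, other.start_col, other.end_line, other.end_col))
    }
}

impl PartialOrd for LocSketch {
    fn partial_cmp(&self, other: &Self) -> Option<Ordering> {
        Some(self.cmp(other))
    }
}

/// Convenience constructor for the sketch.
pub fn loc(start_line: u32, start_col: u32, end_line: u32, end_col: u32) -> LocSketch {
    LocSketch { start_line, start_col, end_line, end_col }
}

/// Sorts regions into source order using the `Ord` impl above.
pub fn in_source_order(mut locs: Vec<LocSketch>) -> Vec<LocSketch> {
    locs.sort();
    locs
}
```

Two regions that start at the same position are ordered by where they end, so a shorter region sorts before a longer one that begins at the same line and column.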

|

||||

|

||||

impl fmt::Display for CoverageLoc {

|

||||

fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {

|

||||

// Customize debug format, and repeat the file name, so generated location strings are

|

||||

// "clickable" in many IDEs.

|

||||

write!(f, "{}:{} - {}:{}", self.start_line, self.start_col, self.end_line, self.end_col)

|

||||

}

|

||||

}

|

||||

|

||||

fn lookup_file_line_col(source_map: &SourceMap, byte_pos: BytePos) -> (Lrc<SourceFile>, u32, u32) {

|

||||

let found = source_map

|

||||

.lookup_line(byte_pos)

|

||||

.expect("should find coverage region byte position in source");

|

||||

let file = found.sf;

|

||||

let line_pos = file.line_begin_pos(byte_pos);

|

||||

|

||||

// Use 1-based indexing.

|

||||

let line = (found.line + 1) as u32;

|

||||

let col = (byte_pos - line_pos).to_u32() + 1;

|

||||

|

||||

(file, line, col)

|

||||

}
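`lookup_file_line_col` turns a byte position into a 1-based line and column by finding the start of the containing line and taking the byte offset from it. The sketch below does the same on a plain `&str`, without `SourceMap`; `line_col` is a hypothetical function, and columns count bytes rather than characters.

```rust
/// Returns the 1-based (line, column) of `byte_pos` within `src`,
/// or `None` if the position lies past the end of the text.
pub fn line_col(src: &str, byte_pos: usize) -> Option<(u32, u32)> {
    if byte_pos > src.len() {
        return None;
    }
    // Byte offset at which each line begins: 0, then one past every '\n'.
    let mut line_starts = vec![0usize];
    line_starts.extend(
        src.bytes()
            .enumerate()
            .filter(|(_, b)| *b == b'\n')
            .map(|(i, _)| i + 1),
    );
    // 0-based index of the last line start that is <= byte_pos.
    let line_idx = line_starts.partition_point(|&start| start <= byte_pos) - 1;
    // Convert both values to 1-based indexing.
    let line = line_idx as u32 + 1;
    let col = (byte_pos - line_starts[line_idx]) as u32 + 1;
    Some((line, col))
}
```

For the text `"fn main() {\n    called();\n}\n"`, byte 16 is the `c` of `called`, which this sketch reports as line 2, column 5.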

|

||||

|

||||

/// Collects all of the coverage regions associated with (a) injected counters, (b) counter

|

||||

/// expressions (addition or subtraction), and (c) unreachable regions (always counted as zero),

|

||||

/// for a given Function. Counters and counter expressions are indexed because they can be operands

|

||||

/// in an expression.

|

||||

/// in an expression. This struct also stores the `function_source_hash`, computed during

|

||||

/// instrumentation and forwarded with counters.

|

||||

///

|

||||

/// Note, it's important to distinguish the `unreachable` region type from what LLVM refers to as

|

||||

/// a "gap region" (or "gap area"). A gap region is a code region within a counted region (either

|

||||

@ -34,50 +156,134 @@ pub struct CoverageRegion {

|

||||

/// lines with only whitespace or comments). According to LLVM Code Coverage Mapping documentation,

|

||||

/// "A count for a gap area is only used as the line execution count if there are no other regions

|

||||

/// on a line."

|

||||

#[derive(Default)]

|

||||

pub struct FunctionCoverageRegions {

|

||||

indexed: FxHashMap<u32, CoverageRegion>,

|

||||

unreachable: Vec<CoverageSpan>,

|

||||

pub struct FunctionCoverage {

|

||||

source_hash: u64,

|

||||

counters: Vec<CoverageRegion>,

|

||||

expressions: Vec<CoverageRegion>,

|

||||

unreachable: Vec<CoverageRegion>,

|

||||

translated: bool,

|

||||

}

|

||||

|

||||

impl FunctionCoverageRegions {

|

||||

pub fn add_counter(&mut self, index: u32, start_byte_pos: u32, end_byte_pos: u32) {

|

||||

self.indexed.insert(

|

||||

index,

|

||||

CoverageRegion {

|

||||

kind: CoverageKind::Counter,

|

||||

coverage_span: CoverageSpan { start_byte_pos, end_byte_pos },

|

||||

},

|

||||

);

|

||||

impl FunctionCoverage {

|

||||

pub fn with_coverageinfo<'tcx>(coverageinfo: &'tcx mir::CoverageInfo) -> Self {

|

||||

Self {

|

||||

source_hash: 0, // will be set with the first `add_counter()`

|

||||

counters: vec![CoverageRegion::default(); coverageinfo.num_counters as usize],

|

||||

expressions: vec![CoverageRegion::default(); coverageinfo.num_expressions as usize],

|

||||

unreachable: Vec::new(),

|

||||

translated: false,

|

||||

}

|

||||

}

|

||||

|

||||

/// Adds a code region to be counted by an injected counter intrinsic, recording the function

|

||||

/// source hash and the region's byte range at the given counter index.

|

||||

pub fn add_counter(

|

||||

&mut self,

|

||||

source_hash: u64,

|

||||

index: u32,

|

||||

start_byte_pos: u32,

|

||||

end_byte_pos: u32,

|

||||

) {

|

||||

self.source_hash = source_hash;

|

||||

self.counters[index as usize] =

|

||||

CoverageRegion { kind: CoverageKind::Counter, start_byte_pos, end_byte_pos };

|

||||

}

|

||||

|

||||

pub fn add_counter_expression(

|

||||

&mut self,

|

||||

index: u32,

|

||||

translated_index: u32,

|

||||

lhs: u32,

|

||||

op: CounterOp,

|

||||

rhs: u32,

|

||||

start_byte_pos: u32,

|

||||

end_byte_pos: u32,

|

||||

) {

|

||||

self.indexed.insert(

|

||||

index,

|

||||

CoverageRegion {

|

||||

kind: CoverageKind::CounterExpression(lhs, op, rhs),

|

||||

coverage_span: CoverageSpan { start_byte_pos, end_byte_pos },

|

||||

},

|

||||

);

|

||||

let index = u32::MAX - translated_index;

|

||||

// Counter expressions start with "translated indexes", descending from `u32::MAX`, so

|

||||

// the range of expression indexes is disjoint from the range of counter indexes. This way,

|

||||

// both counters and expressions can be operands in other expressions.

|

||||

//

|

||||

// Once all counters have been added, the final "region index" for an expression is

|

||||

// `counters.len() + expression_index` (where `expression_index` is its index in

|

||||

// `self.expressions`), and the expression operands (`lhs` and `rhs`) can be converted to

|

||||

// final "region index" references by the same conversion, after subtracting from

|

||||

// `u32::MAX`.

|

||||

self.expressions[index as usize] = CoverageRegion {

|

||||

kind: CoverageKind::CounterExpression(lhs, op, rhs),

|

||||

start_byte_pos,

|

||||

end_byte_pos,

|

||||

};

|

||||

}
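The comment in `add_counter_expression` describes two index spaces: counters count up from 0, and expressions use "translated indexes" that count down from `u32::MAX`. The sketch below works through that arithmetic. The function names are hypothetical, and treating any operand at or above `num_counters` as a translated expression index is an assumption made for illustration, not something the diff states.

```rust
/// Position of an expression inside the `expressions` vector,
/// given its translated index (which descends from `u32::MAX`).
pub fn expression_index(translated_index: u32) -> u32 {
    u32::MAX - translated_index
}

/// Converts an operand to its final "region index".
/// Assumption: operands below `num_counters` are counter indexes and are
/// kept as-is; anything else is a translated expression index and lands
/// after all counters, at `num_counters + expression_index`.
pub fn final_region_index(operand: u32, num_counters: u32) -> u32 {
    if operand < num_counters {
        operand
    } else {
        num_counters + expression_index(operand)
    }
}
```

With three counters, the first expression (translated index `u32::MAX`) gets final region index 3 and the second (`u32::MAX - 1`) gets 4, so counters and expressions never collide when used as operands.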

|

||||

|

||||

pub fn add_unreachable(&mut self, start_byte_pos: u32, end_byte_pos: u32) {

|